Logging is a critical component in Flask applications, providing developers with insights into application behavior, errors, and performance.

As a developer, understanding how logging works is essential, especially for web applications, because logs help you identify and fix errors by tracing your code's execution, much like following a breadcrumb trail.

Logging also helps reduce mean time to detection (MTTD) and mean time to resolution (MTTR) by enabling automated alerts, historical data analysis, and real-time monitoring.

In this comprehensive guide, we'll dive deep into Flask logging, covering everything from basic setup to advanced techniques. You'll learn:

- How to set up and configure logging in your Flask apps

- Best practices for structuring and formatting your logs

- Techniques for handling sensitive information and high log volumes

- Ways to centralize your logs for easier analysis

Whether you're troubleshooting a tricky bug or monitoring your app's performance, the insights you gain from proper logging can be invaluable. Let's unlock the power of Flask logging and take your development skills to the next level.

Logging with Flask

Prerequisites

Before we dive into Flask logging, ensure you have the following set up:

A recent version of Python installed on your machine. You can download it from the official Python site.

Flask installed on your system. Install it using pip:

pip install flask

A new working directory for this project:

mkdir flasklog
cd flasklog

Create a new file named app.py for your Flask application in this directory (the following command opens it in VS Code):

code app.py

With these prerequisites in place, you're ready to explore Flask logging.

Basic Usage

Set up a Simple Logger in Flask

Import the Flask class in app.py:

from flask import Flask

Flask is a class provided by the flask framework and is imported into our application.

It is the central component used to create a Flask web application instance in app.py:

app = Flask(__name__)

What is an instance? An instance is a single, unique object created from a class. Here, app is an instance of the Flask class.

In addition, we'll define three routes (/, /info, and /warning) in app.py. When a route is accessed, Flask invokes its view function, which returns a string. The /info and /warning functions also make logging calls (info() and warning()) before returning.

# A logging call
app.logger.info("An info message!")

Let’s break down this logging call:

app: the Flask application instance defined in app.py.

logger: an attribute of the app object (a standard Python logging.Logger) that provides methods for creating log entries at different severity levels.

info(): a method of the logger object that logs a message with the INFO severity level.

We’ll explore the severity of logging levels in detail further in this article.

When you make a logging call, the process looks like this:

- The string "An info message!" is created to be logged.

- The logger object associated with the app instance is accessed.

- The info() method on the logger object is called with the message string.

- A log record is created that includes the message, the log level, and other metadata such as the timestamp, module name, etc.

- The log record is dispatched to any handlers configured in the logging setup.

A Handler defines where and how log messages are output (e.g., console, file, email).
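To see these record-and-dispatch steps without running a server, here is a minimal stdlib-only sketch. It assumes a hypothetical RecordCollector handler (not part of Flask or logging) that simply keeps every record it receives, so we can inspect the metadata the logger attached; breadcrumbs_demo is an illustrative logger name.

```python
import logging


class RecordCollector(logging.Handler):
    """Hypothetical handler that stores records instead of printing them."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        # emit() is called once for each record that passes the level checks
        self.records.append(record)


logger = logging.getLogger("breadcrumbs_demo")  # illustrative logger name
logger.setLevel(logging.INFO)
collector = RecordCollector()
logger.addHandler(collector)

logger.info("An info message!")

record = collector.records[0]
print(record.levelname, record.name, record.getMessage())
# Metadata attached by the logger: creation time and call-site details
print(record.created, record.module, record.lineno)
```

The first print shows INFO, the logger name, and the message; the second shows some of the extra metadata every record carries.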

Let’s look at an example:

from flask import Flask

# Create a Flask application instance
app = Flask(__name__)


# Route to handle requests to the root URL "/"
@app.route("/")
def hello():
    # A simple greeting message is returned
    return "Greetings!"


# Route to handle requests to "/info"
@app.route("/info")
def info():
    # Log an information message
    app.logger.info("An info message.")
    # Return a message indicating info was logged
    return "Info message"


# Route to handle requests to "/warning"
@app.route("/warning")
def warning():
    # Log a warning message
    app.logger.warning("A warning message.")
    # Return a message indicating a warning was logged
    return "Warning message"

The app instance handles HTTP requests, and its app.logger object is used to make logging calls with methods like info() and warning().

We’ll learn more about handlers further in this article.

Run the application with the flask run command and check the output in the server terminal:

* Debug mode: off
WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
* Running on http://127.0.0.1:5000
Press CTRL+C to quit
127.0.0.1 - - [10/Jul/2024 16:13:08] "GET / HTTP/1.1" 200 -

Open the URL shown in the console in your web browser.

We defined three routes in app.py, but the browser only shows the string returned by hello(). Why is that?

Each view function is bound to its own URL by the @app.route() decorator, and the root URL (/) is mapped to hello(). To view another route, append its path to the URL. For example, to access the /warning route, open http://127.0.0.1:5000/warning.

In the server terminal, you’ll see:

127.0.0.1 - - [10/Jul/2024 16:13:08] "GET / HTTP/1.1" 200 -

[2024-07-10 16:22:32,112] WARNING in app: A warning message.

127.0.0.1 - - [10/Jul/2024 16:22:32] "GET /warning HTTP/1.1" 200 -

127.0.0.1 - - [10/Jul/2024 16:22:44] "GET / HTTP/1.1" 200 -

Similarly, visit the /info route in your browser and return to the server terminal:

127.0.0.1 - - [10/Jul/2024 16:27:42] "GET /info HTTP/1.1" 200 -

However, unlike the warning message, "An info message." doesn't appear in the terminal. That's because, outside debug mode, app.logger has no level of its own, so it falls back to the root logger's default level, WARNING, and drops less severe messages such as INFO. We can customize this behavior to suit our application, but first we need a basic understanding of handlers and formatters.
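A minimal stdlib-only sketch of this fallback, assuming a logger named demo_app as a stand-in for app.logger (Flask's app.logger is an ordinary logging.Logger named after the application) and an in-memory stream so the output can be inspected:

```python
import io
import logging

stream = io.StringIO()
logger = logging.getLogger("demo_app")  # hypothetical stand-in for app.logger
logger.addHandler(logging.StreamHandler(stream))

# No level set on this logger, so it inherits the root logger's WARNING
initial_level = logger.getEffectiveLevel()
print(logging.getLevelName(initial_level))  # WARNING

logger.info("An info message.")       # dropped: INFO < WARNING
logger.warning("A warning message.")  # emitted

# Giving the logger its own, lower level lets INFO records through
logger.setLevel(logging.INFO)
logger.info("An info message.")

print(stream.getvalue())
```

Only the warning and the second info message end up in the stream.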

Handlers and Formatters

Two essential components of logging configuration are Handlers and Formatters, which play distinct roles in how log messages are processed and formatted.

Formatter

A Formatter determines the structural layout of log messages before they are emitted by a Handler. It lets you customize how log records are formatted, including timestamps, log levels, module names, and the message content, in a specified order. Consistent formatting makes log records easier to maintain and parse.

We can add a custom formatter in our app.py using the following code:

import logging
formatter = logging.Formatter('[%(asctime)s] %(levelname)s - %(message)s')

Here,

%(asctime)s: a placeholder replaced by the time the log record was created. The surrounding square brackets [] are literal characters that set the timestamp apart from the rest of the message.

%(levelname)s: a placeholder replaced by the log level of the message (e.g., DEBUG, INFO, WARNING).

%(message)s: a placeholder replaced by the log message content you provide when logging.

The expected output would be:

[2024-07-11 12:24:16,417] INFO - An info message.
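To see how the placeholders are filled in, you can apply the formatter to a log record directly. A minimal sketch: logging.makeLogRecord() builds the record by hand here, whereas in an app the logger creates it for you, and the timestamp reflects the moment you run it.

```python
import logging

formatter = logging.Formatter('[%(asctime)s] %(levelname)s - %(message)s')

# Build a LogRecord by hand; normally logger.info(...) does this
record = logging.makeLogRecord({
    "levelname": "INFO",
    "levelno": logging.INFO,
    "msg": "An info message.",
})

line = formatter.format(record)
print(line)  # timestamp varies: [YYYY-MM-DD HH:MM:SS,mmm] INFO - An info message.
```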

Handler

A handler defines where and how log messages are output. Handlers are responsible for displaying, storing, or sending log records (messages) to their destinations.

You may have noticed that our earlier code didn't add any handler. So how were the log messages displayed on the console?

If no handlers are explicitly added to the app.logger, Flask will add a default handler to ensure that log messages are output to the console.

We can configure a handler like this:

import logging

# Configure a console handler with a custom formatter
# Create a StreamHandler to handle console output
handler = logging.StreamHandler()
# Define a custom log message format
formatter = logging.Formatter('[%(asctime)s] %(levelname)s in %(module)s: %(message)s')
# Assign the formatter to the handler
handler.setFormatter(formatter)

Here, a StreamHandler instance is created to send logs to the console (sys.stderr by default). A Formatter instance is defined with a specific format, and the setFormatter() method associates it with the handler, so every log message emitted by this handler follows that format.
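The handler above has no effect until it is attached to a logger with addHandler(). A minimal sketch, assuming a stdlib logger named handler_demo as a stand-in for app.logger (in Flask you would call app.logger.addHandler(handler)) and an in-memory stream in place of the console:

```python
import io
import logging

# In an app you'd use logging.StreamHandler() with no argument (writes to sys.stderr);
# an in-memory buffer makes the result easy to inspect here.
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter('[%(asctime)s] %(levelname)s in %(module)s: %(message)s'))

logger = logging.getLogger("handler_demo")  # stand-in for app.logger
logger.addHandler(handler)

logger.warning("A warning message.")
output = buffer.getvalue()
print(output)
```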

There are various types of handlers:

- StreamHandler: sends log messages to a stream such as the console (sys.stderr by default, or sys.stdout).

handler = logging.StreamHandler()

- FileHandler: writes log messages to a specified file on disk, such as app.log.

handler = logging.FileHandler('app.log')

- HTTPHandler: sends log messages to an HTTP endpoint.

from logging.handlers import HTTPHandler

# Set up the HTTP handler
http_handler = HTTPHandler(
    host='localhost:5001',
    url='/log',
    method='POST',
)
http_handler.setLevel(logging.ERROR)
app.logger.addHandler(http_handler)
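As a concrete illustration of FileHandler, the following minimal sketch writes one record to app.log and reads it back. The temporary directory and the file_demo logger name are assumptions made so the example is self-contained.

```python
import logging
import os
import tempfile

# Write app.log inside a throwaway directory (demo-only choice)
log_path = os.path.join(tempfile.mkdtemp(), "app.log")

file_handler = logging.FileHandler(log_path)
file_handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))

logger = logging.getLogger("file_demo")  # illustrative name
logger.setLevel(logging.INFO)
logger.addHandler(file_handler)

logger.info("Written to disk")

# Flush and release the file before reading it
logger.removeHandler(file_handler)
file_handler.close()

with open(log_path) as f:
    contents = f.read()
print(contents)  # INFO: Written to disk
```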

Note: Python's built-in logging module uses the term handler, while Loguru uses the term sink.

Now, back to app.py, where we wanted to customize our application's behavior by overriding the default logger configuration.

Configure a Logger

In the Set up a Simple Logger in Flask section, the /info route's message didn't appear because, by default, Flask's logger drops messages below the WARNING level. One way around this is to override the default configuration.

To do so, add a call to logging.config.dictConfig() to the existing code in app.py:

from flask import Flask
from logging.config import dictConfig

# Configure logging using dictConfig
dictConfig(
    {
        # Specify the logging configuration version
        "version": 1,
        "formatters": {
            # Define a formatter named 'default'
            "default": {
                # Specify log message format
                "format": "[%(asctime)s] %(levelname)s in %(module)s: %(message)s",
            }
        },
        "handlers": {
            # Define a console handler configuration
            "console": {
                # Use StreamHandler to log to stdout
                "class": "logging.StreamHandler",
                "stream": "ext://sys.stdout",
                # Use 'default' formatter for this handler
                "formatter": "default",
            }
        },
        # Configure the root logger
        "root": {
            # Set root logger level to DEBUG
            "level": "DEBUG",
            # Attach 'console' handler to the root logger
            "handlers": ["console"],
        },
    }
)

app = Flask(__name__)

dictConfig is used to configure the logging system in Python with a dictionary that defines the configuration details. The dictionary can specify formatters, handlers, loggers, and other logging settings. This configuration dictionary contains the following key elements:

- version key: the schema version. It is required, and 1 is currently the only valid value.

- formatters key: specifies the formatting patterns of log records. Here, a formatter named default formats log records as "[%(asctime)s] %(levelname)s in %(module)s: %(message)s".

- handlers key: defines the handlers available to your loggers. Here, a StreamHandler named console pushes log records to the console (sys.stdout).

- root key: configures the root logger, such as its level and handlers.

Note that this configuration must run before the app instance is created. If app.logger is accessed before logging is configured, Flask attaches its default handler to it.

Run app.py with flask run and visit a route that logs at every severity level (like the hello() route in the Log Level Severity section). The server terminal shows output like this:

[2024-07-10 16:38:20,129] INFO in app: An info message

[2024-07-10 16:38:20,129] WARNING in app: A warning message

[2024-07-10 16:38:20,129] ERROR in app: An error message

[2024-07-10 16:38:20,129] CRITICAL in app: A critical message

[2024-07-10 16:38:20,129] INFO in _internal: 127.0.0.1 - - [10/Jul/2024 16:38:20] "GET / HTTP/1.1" 200 -

[2024-07-10 16:38:34,791] DEBUG in app: A debug message

Note: Use CTRL + C to stop the server.

Beyond the Basics of Flask Logging

Log Level Severity

Log level severity in logging refers to the categorization of log messages based on their importance and urgency. Log levels, in increasing order of severity, are:

DEBUG < INFO < WARNING < ERROR < CRITICAL
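Under the hood, each level is an integer constant in Python's logging module, and the ordering above is a plain numeric comparison. A quick check:

```python
import logging

levels = ["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"]
for name in levels:
    # getattr(logging, "DEBUG") is the constant logging.DEBUG, and so on
    print(name, getattr(logging, name))
# DEBUG 10
# INFO 20
# WARNING 30
# ERROR 40
# CRITICAL 50

# A logger set to WARNING accepts a record only if its level is >= WARNING
threshold = logging.WARNING
enabled = [name for name in levels if getattr(logging, name) >= threshold]
print(enabled)  # ['WARNING', 'ERROR', 'CRITICAL']
```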

Severity levels make log data easier to manage and interpret in web applications. With them, you can prioritize responses to urgent events, streamline troubleshooting, and keep log files clear and organized.

Each of these log levels has a corresponding method that sends a log entry at that level. For instance:

@app.route("/")
def hello():
    # Log messages at different severity levels using the Flask app's logger
    app.logger.debug("A debug message")
    app.logger.info("An info message")
    app.logger.warning("A warning message")
    app.logger.error("An error message")
    app.logger.critical("A critical message")
    # Return a simple "Hello, World!" response
    return "Hello, World!"

Now run the app without the dictConfig() configuration, visit /, and observe the following output:

[2024-07-10 16:34:49,121] WARNING in app: A warning message

[2024-07-10 16:34:49,134] ERROR in app: An error message

[2024-07-10 16:34:49,134] CRITICAL in app: A critical message

Only messages at WARNING level or above are logged. Because this logger hasn't been configured, Flask uses the defaults, which drop DEBUG and INFO messages.

To include INFO and DEBUG messages, change the default configuration as we did in the previous section.

Let’s also take an example to see how we can catch and log errors in our code:

# Define an '/error' endpoint
@app.route('/error')
def error():
    try:
        # Raises ZeroDivisionError
        1 / 0
    except Exception as e:
        # Log the error using the Flask app's logger
        app.logger.error('An error occurred: %s', e)
        # Return an error message with status code 500
        return "An error occurred!", 500

In this example, the /error route intentionally triggers a ZeroDivisionError by trying to divide 1 by 0. To manage this error, the code uses a try-except block. If any error, like the division by zero, occurs during execution, it's caught immediately.

The app.logger.error('An error occurred: %s', e) line logs the specific error message for developers to review later. To let users know something went wrong, the route responds with "An error occurred!" and sends a status code 500 (Internal Server Error).

Make sure the configured level lets ERROR messages through. For example, this root configuration logs only ERROR and CRITICAL messages:

"root": {"level": "ERROR", "handlers": ["console"]}

Run the app and visit http://127.0.0.1:5000/error. You’ll see the following output in the server terminal:

[2024-07-11 21:10:48,801] ERROR in app: An error occurred: division by zero

Your browser displays the "An error occurred!" message returned by the error() function, confirming that the error was caught and logged.
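Logging the exception with %s records only its message ("division by zero"). If you also want the traceback, the standard Logger.exception() method, which app.logger has as well since it is a regular logging.Logger, logs at ERROR level and appends the current traceback. A minimal stdlib sketch; error_demo and divide() are illustrative names:

```python
import io
import logging

buffer = io.StringIO()
logger = logging.getLogger("error_demo")  # stand-in for app.logger
logger.addHandler(logging.StreamHandler(buffer))


def divide(a, b):
    try:
        return a / b
    except ZeroDivisionError:
        # exception() logs at ERROR level and adds the traceback to the record
        logger.exception("An error occurred while dividing %s by %s", a, b)
        return None


result = divide(1, 0)
output = buffer.getvalue()
print(output)
```

Call exception() only from inside an except block, where there is an active exception to report.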

Multiple Loggers

Web applications often use multiple loggers to handle different types of messages and route them to the appropriate destinations. Separate loggers help classify log entries by component and significance, and they keep logs from different parts of your app from getting mixed up. This independence matters in web apps, where multiple components may run at the same time: giving each its own logging configuration avoids confusion.

Consider the following code, for example.

from flask import Flask
from logging.config import dictConfig
import logging

# Configure logging using dictConfig
dictConfig(
    {
        # Specify the logging configuration version
        "version": 1,
        "formatters": {
            # Define a formatter named 'default'
            "default": {
                "format": "[%(asctime)s] %(levelname)s in %(module)s: %(message)s",
            }
        },
        "handlers": {
            # Define a console handler configuration
            "console": {
                # Use StreamHandler to log to stdout
                "class": "logging.StreamHandler",
                # Log to standard output
                "stream": "ext://sys.stdout",
                # Use 'default' formatter for this handler
                "formatter": "default",
            }
        },
        # Configure the root logger
        "root": {
            "level": "ERROR",
            # Attach 'console' handler to the root logger
            "handlers": ["console"],
        },
        "loggers": {
            # Configure a specific logger named 'root2'
            "root2": {
                "level": "INFO",
                # Attach 'console' handler to this logger
                "handlers": ["console"],
                # Do not propagate messages to parent loggers
                "propagate": False,
            }
        },
    }
)

# Get logger instances for 'root' and 'root2' loggers
root1 = logging.getLogger("root")
root2 = logging.getLogger("root2")

app = Flask(__name__)


@app.route("/")
def hello():
    # Log messages using 'root1' logger
    root1.debug("A debug message")
    root1.info("An info message")
    root1.warning("A warning message")
    root1.error("An error message")
    root1.critical("A critical message")
    # Log messages using 'root2' logger
    root2.debug("A debug message")
    root2.info("An info message")
    root2.warning("A warning message")
    root2.error("An error message")
    root2.critical("A critical message")
    return "Hello, World!"


# Run the Flask application if executed directly
if __name__ == '__main__':
    app.run(debug=True)

Here, the configuration dictionary contains both a root key and a loggers key.

The loggers section is where we define additional named loggers, like root2 in this example. Each can have its own level, handlers, and propagation behavior, independent of the root logger's setup.

root1 isn't included in the loggers section because it refers to the root logger itself, which is configured under the separate root key. The loggers section is only for named loggers beyond the root logger.

These loggers are retrieved as follows:

root1 = logging.getLogger("root") returns the root logger (on Python 3.9 and later, the name "root" maps to the root logger; logging.getLogger() with no arguments does the same on any version). root2 = logging.getLogger("root2") returns the logger named root2, which dictConfig configured separately from the root logger. This separation allows independent settings, such as different log levels or output destinations.

The main difference between the two lies in their configuration. root2 is defined under "loggers" in dictConfig, with its own log level (INFO), handlers ("console"), and propagation setting (False, so its messages aren't passed on to ancestor loggers and printed a second time by the root's console handler). root1 is the root logger, configured under "root", which sends messages of level ERROR and above to the console handler.

This approach ensures that root1 and root2 can be independently configured and used throughout the application, offering control over logging behaviors.

Output:

* Running on http://127.0.0.1:5000
[2024-07-12 00:10:12,413] INFO in _internal: Press CTRL+C to quit
[2024-07-12 00:10:15,473] ERROR in app: An error message
[2024-07-12 00:10:15,473] CRITICAL in app: A critical message
[2024-07-12 00:10:15,473] INFO in app: An info message
[2024-07-12 00:10:15,474] WARNING in app: A warning message
[2024-07-12 00:10:15,474] ERROR in app: An error message
[2024-07-12 00:10:15,474] CRITICAL in app: A critical message

Notice that root1 shows only ERROR and CRITICAL messages, because the root logger's level is ERROR, and that root2's debug message isn't displayed, because its level is set to INFO. To see debug messages from root2, change its level to DEBUG:

"root2": {
    "level": "DEBUG",  # This was INFO earlier
    "handlers": ["console"],
    "propagate": False,
}
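Logger names containing dots form a hierarchy, and propagation follows it. A minimal stdlib sketch with two hypothetical loggers, shop and shop.payments, each with its own in-memory handler, shows a record reaching both handlers until propagate is set to False:

```python
import io
import logging

# One in-memory handler per logger so we can see where each record lands
parent_out, child_out = io.StringIO(), io.StringIO()

parent = logging.getLogger("shop")          # hypothetical parent logger
parent.setLevel(logging.INFO)
parent.addHandler(logging.StreamHandler(parent_out))

child = logging.getLogger("shop.payments")  # child of "shop" because of the dot
child.addHandler(logging.StreamHandler(child_out))

child.info("charged order 42")   # handled by child, then propagated to parent
child.propagate = False
child.info("refunded order 42")  # handled by child only

print(repr(parent_out.getvalue()))  # 'charged order 42\n'
print(repr(child_out.getvalue()))   # 'charged order 42\nrefunded order 42\n'
```

The child has no level of its own, so it inherits INFO from shop; this is the same mechanism that lets root2 above keep its messages out of the root logger's handler.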

Customizing Log Format by Subclassing logging.Formatter

You might be wondering how this differs from the logging.Formatter usage we saw earlier.

By default, logs at WARNING level and above are printed to the console, and a format string with a timestamp, severity level, and message is adequate for basic use. Web applications, however, often need logs to contain specific information such as user IDs, request paths, session data, or custom tags. For this, we need a custom formatter whose logic goes beyond what a format string can express, giving us more control over the log message format.

By subclassing logging.Formatter we can define our custom logic for formatting log messages.

Subclassing logging.Formatter means creating a new class that inherits from logging.Formatter and overrides its methods (most often format()). This gives us complete control over the formatting process, so it can suit our web application's needs and style.

Let’s see an example,

import logging


# Define a custom logging formatter class
class CustomFormatter(logging.Formatter):
    # Override the format method to customize log message formatting
    def format(self, record):
        log_message = f"{record.levelname} - {record.funcName} at line {record.lineno}: {record.getMessage()}"
        return log_message


# Get logger instance for the current module
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)
handler = logging.StreamHandler()
handler.setFormatter(CustomFormatter())
logger.addHandler(handler)
logger.info('This is an info message.')

A new class, CustomFormatter, inherits from logging.Formatter and overrides its format() method to create a custom format.

Here, the format(self, record) method is overridden to define a custom log format that includes:

- record.levelname for the log level.

- record.funcName for the function from which the logging call was made.

- record.lineno for the line number of the logging call.

- record.getMessage() for the log message.

Then a logger for the current module is obtained with logging.getLogger(__name__), where __name__ is the name of the current module.

Using the setLevel() method, we set the logger's level to INFO so that messages of INFO severity and above are recorded. After attaching the handler with our custom formatter, we log a message:

INFO - <module> at line 15: This is an info message.

You can also change the format to include timestamps, level and message as:

log_message = f"{record.created}: {record.levelname}: {record.getMessage()}"

We get the following output:

1720761497.993521: INFO: This is an info message.
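record.created is a raw Unix timestamp, which is hard to read. A minimal sketch of a subclass that calls the inherited formatTime() helper instead, and also shows one way to attach request-specific data such as a user ID: the caller passes it through the standard extra argument, and the formatter reads it with a default. ContextFormatter and user_id are illustrative names, not part of Flask or logging.

```python
import io
import logging


class ContextFormatter(logging.Formatter):
    """Hypothetical formatter: readable timestamp plus an optional user_id."""

    def format(self, record):
        # formatTime() turns record.created into a human-readable string
        timestamp = self.formatTime(record, "%Y-%m-%d %H:%M:%S")
        # user_id exists only when the caller passed it via `extra`
        user_id = getattr(record, "user_id", "-")
        return f"{timestamp} {record.levelname} user={user_id}: {record.getMessage()}"


buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(ContextFormatter())

logger = logging.getLogger("context_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)

logger.info("Profile updated", extra={"user_id": 42})
logger.info("Anonymous visit")
lines = buffer.getvalue().splitlines()
print(lines)
```

In a Flask view, the value passed in extra could come from the current request or session; that wiring is left out here.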

Best Practices for Flask Logging

Ensuring Sensitive Information is Not Logged

Logging Filters

Logging filters allow us to selectively discard log messages based on predefined criteria, ensuring that sensitive data remains protected and compliant with security standards. This is useful in web applications for preventing the exposure of confidential information, maintaining user privacy, and reducing the risk of data breaches.

Let’s see how we can employ this functionality in our code:

import logging


class RedactingFilter(logging.Filter):
    # Override the filter method to check for sensitive information
    def filter(self, record):
        sensitive_info = ["password", "token", "secret"]
        for word in sensitive_info:
            if word in record.getMessage():
                # Returning False discards the record
                return False
        return True


# Configure logger with redacting filter
logger = logging.getLogger(__name__)
logger.setLevel(logging.DEBUG)
handler = logging.StreamHandler()
handler.setLevel(logging.DEBUG)
handler.addFilter(RedactingFilter())
formatter = logging.Formatter("[%(asctime)s] %(levelname)s in %(module)s: %(message)s")
handler.setFormatter(formatter)
logger.addHandler(handler)

# Example usage
logger.info("Processing request with sensitive information: password=123456")
logger.info("Authentication successful. token: abcdef1234567890")
logger.info("Received secret document from user.")
logger.info("This message will be logged")

We create a custom filter class named RedactingFilter that inherits from logging.Filter and overrides the filter(self, record) method to define the filtering logic.

A list sensitive_info holds the words that must not appear in log records. If the message contains any word from the list, the method returns False and the record is discarded; otherwise it returns True and the record is logged.

Output:

[2024-07-12 11:23:33,839] INFO in app: This message will be logged

Notice that only the log record containing none of the words in sensitive_info is displayed.

Replace Method

What if a message contains a sensitive word, but we still want it to appear in our log records while maintaining user privacy and security? In that case, the filter can modify the message, replacing the sensitive text with a placeholder, instead of discarding the record.

import logging
from flask import Flask, request

app = Flask(__name__)


# Define a custom logging filter
class SensitiveDataFilter(logging.Filter):
    def filter(self, record):
        # Example keywords to replace
        sensitive_info = ['password', 'credit_card', 'ssn']
        # Replace each keyword in the log message template
        for pattern in sensitive_info:
            record.msg = record.msg.replace(pattern, '*FILTERED*')
        return True


# Configure the logger
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)
# Add a stream handler to log to the console
handler = logging.StreamHandler()
handler.setLevel(logging.INFO)
# Apply the sensitive data filter to the handler
handler.addFilter(SensitiveDataFilter())
# Create a formatter and add it to the handler
formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')
handler.setFormatter(formatter)
# Add the handler to the logger
logger.addHandler(handler)


# Example route that logs sensitive information
@app.route('/')
def index():
    # Simulate sensitive information being logged
    logger.info("Sensitive info: password=%s, credit_card=%s",
                request.args.get('password'), request.args.get('credit_card'))
    return "Logged sensitive information."


if __name__ == '__main__':
    app.run(debug=True)

Here, we've used the record.msg.replace(pattern, '*FILTERED*') method to replace each sensitive keyword with *FILTERED*. Note that this only replaces the keywords in the message template: the values passed as arguments (the actual password and credit card number) still reach the log.

Output in the server terminal after visiting / without query parameters, so both values are None:

2024-07-12 11:51:41,373 - INFO - Sensitive info: *FILTERED*=None, *FILTERED*=None
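To mask the values themselves, the filter can work on the fully merged message from record.getMessage() and replace whatever follows each sensitive key. A minimal sketch, assuming messages use a key=value style like the one above; MaskValuesFilter and its regular expression are illustrative, not a standard API:

```python
import io
import logging
import re


class MaskValuesFilter(logging.Filter):
    """Hypothetical filter that masks the values of sensitive key=value pairs."""

    PATTERN = re.compile(r"\b(password|credit_card|ssn)=([^,\s]+)")

    def filter(self, record):
        # getMessage() merges record.args into the template first
        masked = self.PATTERN.sub(r"\1=*FILTERED*", record.getMessage())
        # Store the masked text and clear args so it is not re-merged later
        record.msg, record.args = masked, None
        return True


buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.addFilter(MaskValuesFilter())

logger = logging.getLogger("mask_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)

logger.info("Sensitive info: password=%s, credit_card=%s", "hunter2", "4111111111111111")
print(buffer.getvalue())  # Sensitive info: password=*FILTERED*, credit_card=*FILTERED*
```

Because the filter rewrites the record in place, any handler that processes the record afterwards also sees the masked text. A regex only catches the patterns you anticipate, so it complements, rather than replaces, keeping secrets out of log calls in the first place.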

Regularly Reviewing and Pruning Log Files

Regularly reviewing and pruning log files is indispensable in web-based applications, especially in Flask, for practical and security-focused reasons. Web applications handle a constant flow of user interactions and transactions, generating extensive logs that may inadvertently capture sensitive data such as user credentials or payment information. By regularly reviewing logs, developers can promptly identify and remove such sensitive information, reducing the risk of data breaches and ensuring compliance with data protection regulations.

Log rotation and retention let you prune log files automatically. Let’s take an example:

from flask import Flask, request
import logging
from logging.handlers import TimedRotatingFileHandler

app = Flask(__name__)

# Configure logging
LOG_FILE = 'app.log'

# Create a timed rotating file handler to manage log files
handler = TimedRotatingFileHandler(LOG_FILE, when='midnight', interval=1, backupCount=7)
handler.setLevel(logging.INFO)

# Create a formatter and add it to the handler
formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')
handler.setFormatter(formatter)

# Add the handler to the root logger and lower the root level from its
# default of WARNING so that INFO records reach the handler
root_logger = logging.getLogger()
root_logger.addHandler(handler)
root_logger.setLevel(logging.INFO)


@app.route('/')
def index():
    # Simulate logging sensitive information
    password = request.args.get('password')
    credit_card = request.args.get('credit_card')
    logging.info("Sensitive info: password=%s, credit_card=%s", password, credit_card)
    return "Logged sensitive information."


if __name__ == '__main__':
    app.run(debug=True)

We use TimedRotatingFileHandler from Python's logging.handlers module, which rotates a log file (here, app.log) at specified intervals and retains a limited number of backups. Each day at midnight, the current app.log is renamed with a date suffix and a fresh app.log is started; once more than seven backups exist (backupCount=7), the oldest ones are deleted.

TimedRotatingFileHandler() takes the following arguments:

- LOG_FILE: the file that stores the log records.

- when='midnight': specifies when log rotation occurs. Setting when='midnight' rotates log files at midnight each day. Other accepted values are 'S' (seconds), 'M' (minutes), 'H' (hours), 'D' (days), and 'W0' through 'W6' (a weekday, where 'W0' is Monday).

- interval=1: defines the time interval for log rotation. For example, interval=1 combined with when='midnight' rotates logs daily.

- backupCount=7: specifies the number of backup log files to retain after rotation. Here, up to 7 previous days' log files are kept, and older ones are deleted.
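If you'd rather cap log files by size than by time, logging.handlers also provides RotatingFileHandler. A minimal sketch that uses a deliberately tiny maxBytes in a temporary directory so the rotation is visible immediately (both choices are for demonstration only):

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

log_dir = tempfile.mkdtemp()  # throwaway directory for the demo
handler = RotatingFileHandler(
    os.path.join(log_dir, "app.log"),
    maxBytes=100,    # tiny limit so rotation happens quickly; use megabytes in practice
    backupCount=2,   # keep app.log.1 and app.log.2, delete anything older
)

logger = logging.getLogger("rotation_demo")
logger.setLevel(logging.INFO)
logger.addHandler(handler)

for i in range(40):
    logger.info("line %02d", i)  # 8 bytes per record, including the newline

logger.removeHandler(handler)
handler.close()

files = sorted(os.listdir(log_dir))
print(files)  # ['app.log', 'app.log.1', 'app.log.2']
```

Even though four files' worth of records were written, backupCount=2 means only the current file and the two most recent backups remain.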

Easy Parsing Using structlog

In Flask applications, structuring log messages also ensures that logs are not only human-readable but also machine-parseable, facilitating easier analysis and monitoring.

While formatters provide a basic structure, structured logging goes further by recording data in a structured format such as JSON or key-value pairs. This keeps logs readable and makes them easier to parse and analyze with log management tools.

Before you can start logging with structlog, install the package with the following command:

python -m pip install structlog

structlog provides a pre-configured logger by default, which you access by invoking the structlog.get_logger() method.

# Import the structlog library for structured logging
import structlog

# Get a logger instance from structlog
logger = structlog.get_logger()

# Log an info message using structlog
logger.info("Logging with structlog")

Let’s see how we can log data in a structured format such as JSON:

import structlog
import logging
from flask import Flask

# Configure structlog to format log entries as JSON
structlog.configure(
    processors=[
        # Add log level information
        structlog.stdlib.add_log_level,
        # Add timestamp in ISO format
        structlog.processors.TimeStamper(fmt="iso"),
        # Render log entries as JSON
        structlog.processors.JSONRenderer(),
    ],
    # Use a dictionary as the context class
    context_class=dict,
    # Create standard-library loggers to do the actual output
    logger_factory=structlog.stdlib.LoggerFactory(),
    # Wrap them in a BoundLogger that mirrors the standard logging API
    wrapper_class=structlog.stdlib.BoundLogger,
    # Cache the logger on first use for efficiency
    cache_logger_on_first_use=True,
)

# Configure the standard-library root logger, which prints the JSON
# strings that structlog hands to it
logging.basicConfig(level=logging.INFO)

# Create a Flask application instance
app = Flask(__name__)


@app.route('/')
def index():
    # Get a logger instance from structlog
    logger = structlog.get_logger()
    # Log an info message in JSON format
    logger.info("Log entry in JSON format", process_id=12345)
    # Return a simple HTTP response
    return "Hello, World!"


if __name__ == '__main__':
    # Run the Flask application in debug mode if executed directly
    app.run(debug=True)

We configure structlog for handling log entries in JSON format by first setting up a series of processors—functions that enhance each log entry. These processors include:

add_log_level, which appends the log level (INFO) to each entry.TimeStamperfor adding a timestamp inISOformat.JSONRendererto format the log entry itself asJSON.

Then we define dict as the context_class to ensure that log entries maintain a structured format using dictionaries for contextual information. structlog.stdlib.LoggerFactory() is used as the logger factory, ensuring compatibility with Python's standard logging mechanisms, and structlog.stdlib.BoundLogger is used as the wrapper class to enhance logger instances with additional functionalities. cache_logger_on_first_use=True is then enabled to optimize performance by caching loggers upon their initial use.

Start the app with flask run, then open the / route (http://127.0.0.1:5000/) in your browser.

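Notice how the function logs an INFO-level message with logger.info("Log entry in JSON format", process_id=12345), showing how additional context, such as a process ID, can be included in a log entry. structlog renders the entry as JSON and passes it to the standard library logger, whose default basicConfig format adds the INFO:app: prefix (the level name and the logger name):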

INFO:app:{"process_id": 12345, "event": "Log entry in JSON format", "level": "info", "timestamp": "2024-07-12T10:27:34.026154Z"}
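If you would rather have each line be a standalone JSON document, which many log shippers expect, one option is to give the standard library a format string of just %(message)s. The sketch below uses only the standard library: a hand-built JSON string stands in for what structlog's JSONRenderer passes to the stdlib logger, and output goes to an in-memory buffer (an assumption for inspection; a real app would keep the default stderr stream).

```python
import io
import json
import logging

# In-memory stream so the output can be inspected
buffer = io.StringIO()

# Print only the message, dropping the default "LEVEL:logger_name:" prefix.
# force=True (Python 3.8+) replaces any handlers already attached to the root logger.
logging.basicConfig(stream=buffer, format="%(message)s", level=logging.INFO, force=True)

# Stand-in for the JSON string that structlog's JSONRenderer hands to the stdlib logger
payload = json.dumps({"process_id": 12345, "event": "Log entry in JSON format", "level": "info"})
logging.getLogger("app").info(payload)

line = buffer.getvalue().strip()
print(line)
```

In the Flask app itself, the equivalent change would be to call logging.basicConfig(format="%(message)s", level=logging.INFO) instead of the basicConfig call shown earlier.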

Common Errors and Troubleshooting

Logs Not Appearing

Sometimes, logs may not appear in the expected output, which can hinder debugging efforts.

- Check logging configuration: Ensure that the logging configuration for the Flask logger (app.logger) is set up correctly.

- Logging level: Ensure that the logging level (app.logger.setLevel()) is set appropriately. If it is set higher than the severity of the messages being generated, those messages are filtered out and never appear in the logs.

- Handlers and formatters: Double-check that all necessary handlers (such as StreamHandler or FileHandler) are added to the Flask logger with app.logger.addHandler(), and that their formatters are configured to format the logs as desired.
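A quick way to work through these checks is to inspect the logger directly. The helper below, describe_logger, is a hypothetical name written for this guide; it only reads standard logging attributes (level, handlers, propagate, parent), so it should work on Flask's app.logger as well as any other logger. Here a plain logger named flasklog.demo stands in for app.logger and is deliberately misconfigured with a level that is too high for INFO messages.

```python
import logging


def describe_logger(logger):
    """Return one line per logger in the hierarchy, plus the effective level.

    Hypothetical helper: works with any logging.Logger, including app.logger.
    """
    report = []
    current = logger
    while current is not None:
        handler_names = [type(h).__name__ for h in current.handlers]
        report.append(
            f"{current.name}: level={logging.getLevelName(current.level)}, "
            f"handlers={handler_names}, propagate={current.propagate}"
        )
        # Records only travel on to ancestor loggers while propagate is True
        current = current.parent if current.propagate else None
    report.append(f"effective level: {logging.getLevelName(logger.getEffectiveLevel())}")
    return report


# Simulate a misconfigured logger: its level is too high for INFO messages
logger = logging.getLogger("flasklog.demo")
logger.setLevel(logging.WARNING)
logger.addHandler(logging.StreamHandler())

for line in describe_logger(logger):
    print(line)

# isEnabledFor() answers "would a message at this level be processed?"
print(logger.isEnabledFor(logging.INFO))   # False: INFO is below WARNING
print(logger.isEnabledFor(logging.ERROR))  # True
```

If the report shows no handlers anywhere in the chain, or an effective level above the messages you expect, that points directly at the second or third check in the list above.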

Handling High Log Volume Efficiently

- Log rotation: Implement log rotation to manage log file size. This involves configuring handlers (e.g., RotatingFileHandler or TimedRotatingFileHandler) that automatically rotate log files based on size or time intervals.

- Asynchronous logging: Offload the logging work to a separate thread or process so that slow handlers do not block the main application. In Python's standard library, this is done with QueueHandler and QueueListener from logging.handlers.

- Log levels: Adjust log levels dynamically if your logging setup supports it. For example, you might log at a verbose level such as INFO during normal operations and raise the threshold to ERROR or CRITICAL during peak traffic to reduce noise.
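The three techniques can be combined using only the standard library. In the sketch below, a RotatingFileHandler handles rotation, a QueueHandler/QueueListener pair moves the file writes onto a background thread, and a hypothetical set_peak_traffic() helper raises the threshold during busy periods. The 1 KB size limit, the temporary directory, and the logger name are illustrative assumptions; in a Flask app you would attach the QueueHandler to app.logger instead.

```python
import logging
import os
import queue
import tempfile
from logging.handlers import QueueHandler, QueueListener, RotatingFileHandler

# Illustrative location: a throwaway temporary directory
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")

# Log rotation: start a new file when app.log would exceed ~1 KB, keep 3 old files
file_handler = RotatingFileHandler(log_path, maxBytes=1024, backupCount=3)
file_handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))

# Asynchronous logging: callers only put records on the queue; a background
# thread started by QueueListener performs the slower file writes
log_queue = queue.Queue(-1)  # -1 means unbounded
listener = QueueListener(log_queue, file_handler)

logger = logging.getLogger("flasklog.volume_demo")  # in Flask: app.logger
logger.addHandler(QueueHandler(log_queue))
logger.propagate = False  # keep the demo out of the root logger's handlers


def set_peak_traffic(enabled):
    """Hypothetical helper: only let ERROR and above through during peak traffic."""
    logger.setLevel(logging.ERROR if enabled else logging.INFO)


listener.start()

set_peak_traffic(False)
for i in range(200):
    logger.info("request %d handled", i)

set_peak_traffic(True)
logger.info("dropped: INFO is below the peak-traffic threshold")
logger.error("kept: errors are still recorded")

listener.stop()  # processes any queued records, then stops the background thread
file_handler.close()

print(sorted(os.listdir(log_dir)))
```

After the run, the directory holds app.log plus at most three numbered backups, and the INFO message logged during "peak traffic" never reaches any file. Putting the RotatingFileHandler behind the listener means the rollover work also happens off the request thread.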

Centralizing Your Logs in the SigNoz Cloud

Create a Python virtual environment named venv with the following command:

python -m venv venv

Your project directory should now look like this:

flasklog/
├── venv/
│   ├── include/
│   ├── lib/
│   ├── scripts/
│   └── pyvenv.cfg
└── app.py

Activate the virtual environment (this command is for Windows PowerShell):

.\venv\Scripts\Activate

If you run into an error while activating the virtual environment, change the execution policy for the current user to allow local scripts and scripts signed by a trusted publisher:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

Once the virtual environment is active, the PowerShell prompt is prefixed with (venv), for example:

(venv) PS C:\Users\Desktop\SigNoz\flasklog>

Set the following environment variables in the same PowerShell session, replacing INSERT_YOUR_ACCESS_TOKEN_HERE in OTEL_EXPORTER_OTLP_HEADERS (the third line) with your SigNoz ingestion key:

$env:OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED="true"
$env:OTEL_EXPORTER_OTLP_ENDPOINT="https://ingest.in.signoz.cloud:443"
$env:OTEL_EXPORTER_OTLP_HEADERS="signoz-ingestion-key=INSERT_YOUR_ACCESS_TOKEN_HERE"
$env:OTEL_LOG_LEVEL="debug"

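Then run your Flask application with OpenTelemetry instrumentation using the following command. This requires Flask, structlog, and the OpenTelemetry Python packages (such as opentelemetry-distro and opentelemetry-exporter-otlp) to be installed in the virtual environment.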

opentelemetry-instrument --traces_exporter otlp --metrics_exporter otlp --logs_exporter otlp python app.py

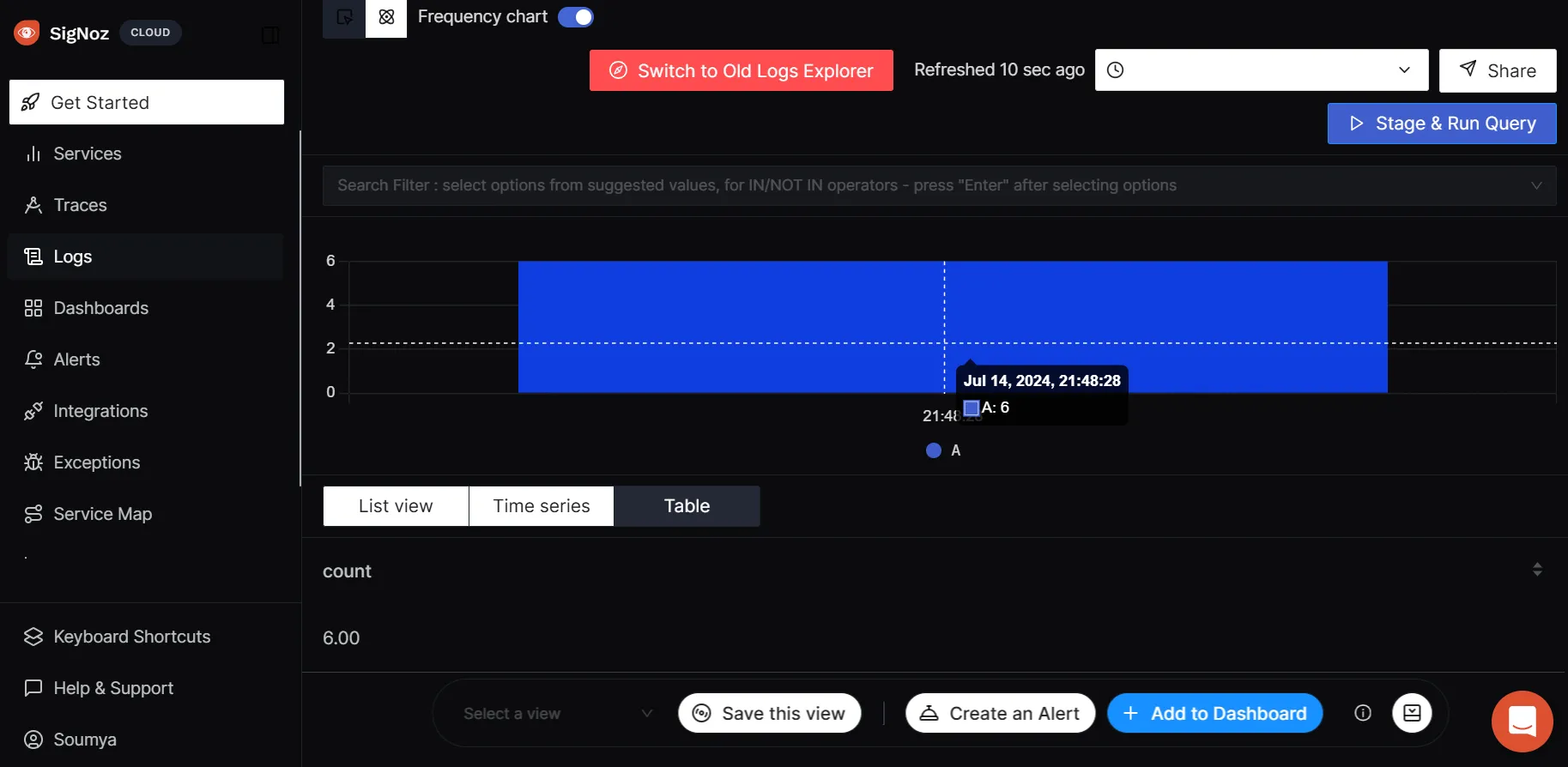

Open http://127.0.0.1:5000 in your browser to see the returned string. The terminal then shows output similar to the following:

Starting Flask app
 * Serving Flask app 'app'
 * Debug mode: on
Starting Flask app
 * Debugger is active!
 * Debugger PIN: 313-027-765
{"process_id": 12346, "event": "Log entry in JSON format", "level": "warning", "timestamp": "2024-07-14T16:18:00.867940Z"}
{"process_id": 12347, "event": "Log entry in JSON format", "level": "error", "timestamp": "2024-07-14T16:18:00.868979Z"}
{"process_id": 12348, "event": "Log entry in JSON format", "level": "critical", "timestamp": "2024-07-14T16:18:00.868979Z"}
127.0.0.1 - - [14/Jul/2024 21:48:00] "GET / HTTP/1.1" 200 -

In the SigNoz dashboard, navigate to Logs and verify that the logs have been sent to SigNoz successfully.

To learn more about the different ways to send logs to SigNoz, see the SigNoz documentation.

Conclusion

- Logging is a crucial component of any application, providing transparency and valuable insights. It enables developers to identify and trace issues by monitoring the code’s execution, like following a breadcrumb trail.

- An instance is a single, unique object created from a class; in this context, app is an instance of the Flask class.

- A handler defines where and how log messages are output. Handlers are responsible for storing, displaying, or sending log records (messages) to specified destinations.

- Multiple loggers are crucial in web applications for handling various types of messages and sending them to the right places. They help organize log entries based on their importance, which makes it easier to debug and monitor what's going on.

- Web applications often require logs to contain specific information such as user IDs, request paths, session data, or custom tags. For this purpose, we require a custom formatter that can customize the formatting logic beyond the default capabilities, giving you more control over the log message format.

- While formatters provide a basic structure, structured logging goes further by recording data in a structured format such as JSON or key-value pairs, for example with structlog.

FAQs

Where are Flask Logs Stored?

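By default, Flask does not write logs to a file. Its default handler sends app.logger messages to the WSGI error stream (wsgi.errors during a request, otherwise sys.stderr), so with the development server they appear on the standard error stream (stderr) of the terminal running Flask. You can direct them to files or other destinations by adding your own logging handlers.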

What Logger Does Flask Use?

Flask uses Python's standard logging module. Each application has its own logger, app.logger, which is an ordinary logging.Logger named after the application (app.name), and Werkzeug's development server logs incoming requests through the werkzeug logger. Because these are standard loggers, you configure them with the usual logging tools (loggers, handlers, and formatters) according to your application's requirements.

What Is the Default Logging Level in Flask?

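Flask does not set a level on app.logger by default, so its effective level is inherited from the root logger, which is WARNING unless you configure it otherwise. This means that only messages of WARNING level and higher (such as ERROR and CRITICAL) are logged by default. When the application runs in debug mode, Flask sets app.logger's level to DEBUG.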
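Both answers can be checked in a few lines. This sketch assumes Flask 1.1 or later (where app.logger is named after app.name) and that the FLASK_DEBUG environment variable is not set, so the app is not in debug mode.

```python
import logging

from flask import Flask

app = Flask(__name__)

# app.logger is an ordinary standard library Logger registered under the app's name
print(isinstance(app.logger, logging.Logger))     # True
print(app.logger is logging.getLogger(app.name))  # True

# Outside debug mode Flask sets no level of its own on app.logger ...
print(logging.getLevelName(app.logger.level))     # NOTSET
# ... so the effective level comes from the root logger (WARNING unless reconfigured)
print(logging.getLevelName(app.logger.getEffectiveLevel()))
```

Because app.logger is reachable through logging.getLogger(app.name), configuration code elsewhere in a project (for example, a dictConfig setup) can target it by name.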

How Do I Disable Default Logging in Flask?

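To silence Flask's application logger completely, set its level to a value higher than CRITICAL, for example app.logger.setLevel(logging.CRITICAL + 1). You can also remove Flask's default handler with app.logger.removeHandler(default_handler), where default_handler is imported from flask.logging, and raise the level of the werkzeug logger to hide the development server's request logs. Another way is to replace the default logging configuration with your own to suit your specific needs.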
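A minimal sketch of these options, using the default_handler object that Flask exposes in flask.logging. The CRITICAL + 1 threshold is just one way to pick a level that no standard message can reach, and the choice of ERROR for the werkzeug logger is an illustrative assumption that keeps server errors visible.

```python
import logging

from flask import Flask
from flask.logging import default_handler

app = Flask(__name__)

# Stop Flask from writing through its default stderr handler
app.logger.removeHandler(default_handler)

# A threshold above CRITICAL means no standard-level message from app.logger gets through
app.logger.setLevel(logging.CRITICAL + 1)

# Werkzeug's development server logs each request ("GET / HTTP/1.1" 200) at INFO;
# raising its level hides those lines while still showing errors
logging.getLogger("werkzeug").setLevel(logging.ERROR)

app.logger.critical("this message is suppressed")
print(app.logger.isEnabledFor(logging.CRITICAL))  # False
```

Raising the level is usually enough on its own; removing default_handler matters more when you want to route app.logger through handlers you configure yourself.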