Docker containers have revolutionized application deployment and management. However, understanding what's happening inside these containers can be challenging. One crucial aspect of container management is process logging. But how do you effectively track all the processes running inside a Docker container?

This guide will walk you through various methods to log processes in Docker containers, from basic commands to advanced techniques. You'll learn why process logging is essential, how to implement different logging strategies, and how to leverage tools like SigNoz for enhanced monitoring.

Understanding Docker Container Processes

Docker containers offer a highly efficient way to run isolated applications by encapsulating processes with their dependencies into a self-contained unit. Unlike traditional virtual machines (VMs), which include a full operating system (OS) for each instance, containers share the host system's OS kernel while maintaining their isolation. This design provides several advantages, such as lower resource overhead, faster startup times, and more efficient scaling. However, it also introduces important nuances in how container processes function compared to those on the host system.

Key differences include:

- Namespace isolation: One of the core features of Docker containers is their use of Linux namespaces to isolate processes. Namespaces give each container its own isolated view of the system. In particular, the PID namespace gives each container its own process tree, so `ps` inside a container lists only that container's processes, while the host still sees them under different, host-level PIDs.

- Resource constraints: In addition to isolation, Docker containers allow precise control over system resource usage. Docker uses cgroups (control groups) to cap how much CPU, memory, and block I/O a container can use and how many processes it can create, so that no container exceeds its predefined limits.

- Process hierarchy: In Docker, each container typically runs a main process, also referred to as the init process, which is assigned a PID (Process ID) of 1. This main process is critical because, in Docker’s process model, when the main process (PID 1) exits, the entire container stops. While Docker containers are often associated with running a single main process, it’s important to note that this process can spawn multiple subprocesses within the container. These subprocesses have their own unique PIDs but remain dependent on the lifecycle of the main process. If the main process exits, all its subprocesses are also terminated, and the container shuts down.
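Because each container has its own PID namespace, the hierarchy described above is visible from inside the container through `/proc`. The sketch below is a minimal, Linux-only Python helper (the names `Proc`, `list_processes`, and `format_tree` are ours, not part of any Docker tooling) that rebuilds the tree rooted at PID 1 from `/proc/<pid>/stat` and `/proc/<pid>/cmdline`. It assumes Python is available in the image; it does not need `ps`.

```python
from __future__ import annotations

import os
from dataclasses import dataclass


@dataclass
class Proc:
    pid: int
    ppid: int
    cmd: str


def read_proc(pid_dir: str) -> Proc:
    """Read one /proc/<pid> directory into a Proc record (Linux only)."""
    with open(os.path.join(pid_dir, "stat")) as f:
        stat = f.read()
    # The command name sits in parentheses and may contain spaces or ')',
    # so everything after the LAST ')' is the list of numeric fields.
    close = stat.rfind(")")
    comm = stat[stat.find("(") + 1 : close]
    fields = stat[close + 1 :].split()  # fields[0] = state, fields[1] = PPID
    with open(os.path.join(pid_dir, "cmdline"), "rb") as f:
        argv = [arg.decode(errors="replace") for arg in f.read().split(b"\0") if arg]
    # Zombies (and kernel threads on a host) have an empty cmdline; use [comm]
    cmd = " ".join(argv) if argv else f"[{comm}]"
    return Proc(pid=int(os.path.basename(pid_dir)), ppid=int(fields[1]), cmd=cmd)


def list_processes(proc_root: str = "/proc") -> list[Proc]:
    """Every process visible in this PID namespace, sorted by PID."""
    procs = []
    for name in os.listdir(proc_root):
        if not name.isdigit():
            continue  # skip entries such as /proc/self or /proc/meminfo
        try:
            procs.append(read_proc(os.path.join(proc_root, name)))
        except (FileNotFoundError, ProcessLookupError, PermissionError):
            continue  # the process exited (or is hidden) while we were reading
    return sorted(procs, key=lambda p: p.pid)


def format_tree(procs: list[Proc], root: int = 1, depth: int = 0) -> list[str]:
    """Indented lines for `root` and its descendants (PID 1 in a container)."""
    lines = ["  " * depth + f"{p.pid} {p.cmd}" for p in procs if p.pid == root]
    for p in procs:
        if p.ppid == root and p.pid != root:
            lines.extend(format_tree(procs, p.pid, depth + 1))
    return lines
```

Inside a container, `print("\n".join(format_tree(list_processes())))` prints the main process first and its subprocesses indented beneath it.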

Understanding these differences is vital for effective monitoring and logging of container processes.

Why Log Processes in Docker Containers?

Logging processes inside Docker containers is a crucial practice for ensuring your applications' reliability, performance, and security. Containers are lightweight and portable, which makes them a popular way to deploy services in dynamic environments. However, the isolated nature of containers presents unique challenges in monitoring and managing the processes running inside them. By logging and tracking container processes, you can gain valuable insights into how your applications behave, troubleshoot issues efficiently, and optimize resource usage.

Enhanced visibility: Docker containers encapsulate processes, creating an isolated environment where applications run independently from the host system. While this isolation benefits security and consistency, it can also obscure visibility into what’s happening inside the container. Without proper logging, it’s difficult to know how your application performs or if anything is going wrong. Process logging provides a clear view of all the activities happening within the container. By capturing detailed logs, you can:

- See which processes are running at any given time.

- Monitor process start times, resource consumption, and completion.

- Understand the sequence of operations within the container.

This visibility is particularly useful in complex environments where multiple containers run different services. Process logs give you a window into the behaviour of each container, allowing you to identify potential bottlenecks or issues early.

Troubleshooting: Process logs are one of the first places to look for clues when something goes wrong in a containerised application. Because containers are often ephemeral (i.e., they can be spun up, scaled, or removed quickly), tracking down the root cause of a problem can be challenging without logs. Process logs help in several ways during troubleshooting:

- Identifying abnormal process behaviour: If a process consumes too much memory, uses excessive CPU, or behaves unpredictably, process logs can help pinpoint when and why it happened.

- Diagnosing container crashes: Since containers stop when the main process exits, logs can reveal whether the process exited due to an error, a resource limitation, or other issues.

- Tracking failed operations: Logs can capture details about failed operations or tasks within the container, providing context for debugging and recovery.
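When the main process exits, `docker inspect --format '{{.State.ExitCode}}' <container_name>` reports its exit status. The sketch below turns that number into a first hypothesis; `describe_exit_code` is a hypothetical helper. The 128 + N rule is a shell convention, and codes 125 to 127 come from Docker's documentation for `docker run`, but an application can also exit with those values on its own, so treat the result as a hint rather than a diagnosis.

```python
import signal

# Exit codes Docker documents for `docker run` failures
DOCKER_CODES = {
    125: "the docker run command itself failed",
    126: "the container command could not be invoked",
    127: "the container command could not be found",
}


def describe_exit_code(code: int) -> str:
    """Explain a container's State.ExitCode."""
    if code == 0:
        return "exited normally"
    if code in DOCKER_CODES:
        return DOCKER_CODES[code]
    if code > 128:
        # Shell convention: 128 + N means the main process was killed by signal N
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            return f"killed by signal {signum}"
        if signum == signal.SIGKILL:
            return (f"killed by {name} (OOM killer, docker kill, or a docker stop "
                    "timeout; check State.OOMKilled)")
        return f"killed by {name}"
    return f"exited with error status {code}"
```

For example, `describe_exit_code(143)` returns `"killed by SIGTERM"`, the usual result of a `docker stop` that the application handled.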

Performance optimization: One of the main advantages of using Docker containers is efficient resource utilisation. To guarantee peak performance, it's crucial to keep checking resource utilisation in production. Tracking resource metrics alongside your process logs lets you gauge the CPU, memory, disk I/O, and network bandwidth each process consumes. With this information, you can:

- Optimize resource allocation: If a process consistently consumes more resources than expected, you can adjust the container's resource limits (using cgroups) to ensure better performance across the entire system.

- Identify inefficient processes: Process logs can reveal processes that may be hogging resources, running inefficiently, or causing performance bottlenecks. For example, if a background process uses too much CPU, you can optimize or scale it accordingly.

- Plan for scaling: By tracking resource consumption over time, you can predict when additional containers or resources will be needed to handle increasing workloads. This helps in scaling your applications effectively while maintaining performance standards.
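To compare what a container uses with the limits it was given, you can read the cgroup files directly from inside the container. This is a minimal sketch assuming cgroup v2 with a private cgroup namespace (typical for Docker on cgroup v2 hosts), where the container's own limits appear under `/sys/fs/cgroup`; on cgroup v1 hosts the file names differ (for example, `memory.limit_in_bytes`). The helper names are hypothetical. With `docker run --memory=512m --cpus=1.5`, `memory.max` should read `536870912` and `cpu.max` should read `150000 100000`.

```python
from __future__ import annotations

import os
from typing import Optional

CGROUP_ROOT = "/sys/fs/cgroup"  # cgroup v2 mount point inside the container


def parse_limit(raw: str) -> Optional[int]:
    """cgroup v2 limit files hold a number, or 'max' when no limit is set."""
    value = raw.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(raw: str) -> Optional[float]:
    """cpu.max holds '<quota> <period>' in microseconds; return the CPUs allowed."""
    quota, period = raw.split()
    return None if quota == "max" else int(quota) / int(period)


def read_cgroup_usage(root: str = CGROUP_ROOT) -> dict:
    """Current usage and limits for this container's cgroup (None = unlimited)."""

    def read(name: str) -> str:
        with open(os.path.join(root, name)) as f:
            return f.read()

    return {
        "memory_bytes": int(read("memory.current")),
        "memory_limit": parse_limit(read("memory.max")),
        "pids": int(read("pids.current")),
        "pids_limit": parse_limit(read("pids.max")),
        "cpus": parse_cpu_max(read("cpu.max")),
    }
```

Logging this dictionary next to each process snapshot makes it easy to see when a container is approaching its memory or process limit.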

Autoscaling and Security

Process logs and resource metrics also inform autoscaling strategies, helping your containerized applications respond to changes in demand without overloading the system.

Security: Security is a top priority in any production environment, and containers add an extra layer of complexity due to their isolated nature. While containers provide a degree of isolation, they are not immune to security threats. Unusual processes running inside a container may indicate malicious activity, such as an attempted breach or the exploitation of a vulnerability.

Process logging plays a critical role in security monitoring by:

- Detecting unauthorized processes: If a process starts unexpectedly within a container, it could indicate an intrusion. Logs help you identify these rogue processes and respond quickly.

- Monitoring for privilege escalation: Some attacks involve gaining higher privileges within the container or host system. Process logs can track changes in privilege levels and alert you to suspicious activity.

- Maintaining compliance: Regulations in many industries mandate thorough system activity logs, including container processes. Logging helps you meet these requirements by keeping a record of the processes running in your containers.
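Detecting unexpected processes can start as simply as comparing what is running against an allowlist of executables the image is supposed to run. The sketch below is illustrative only: `unexpected_processes` is a hypothetical helper, the PID-to-command map could come from `ps -eaf` or `/proc`, and the sample commands are made up. Matching on executable names is easy for an attacker to evade (a binary can simply be named `python`), so treat a match as a signal to investigate, not as a security control.

```python
from __future__ import annotations

import os


def unexpected_processes(commands: dict[int, str], allowed: set[str]) -> dict[int, str]:
    """Return {pid: command} for processes whose executable is not allow-listed.

    Only the executable's base name is compared, e.g. 'python' for
    '/usr/local/bin/python app.py'.
    """
    flagged = {}
    for pid, cmd in commands.items():
        parts = cmd.split()
        exe = os.path.basename(parts[0]) if parts else ""
        if exe not in allowed:
            flagged[pid] = cmd
    return flagged
```

For example, `unexpected_processes({1: "/bin/bash /wrapper.sh", 42: "/tmp/miner"}, {"bash"})` returns `{42: "/tmp/miner"}`.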

By implementing robust process logging, you can ensure your containerized applications run smoothly and securely.

Methods to Log Processes in Docker Containers

There are several approaches to logging processes in Docker containers. Let's explore the most common and effective methods.

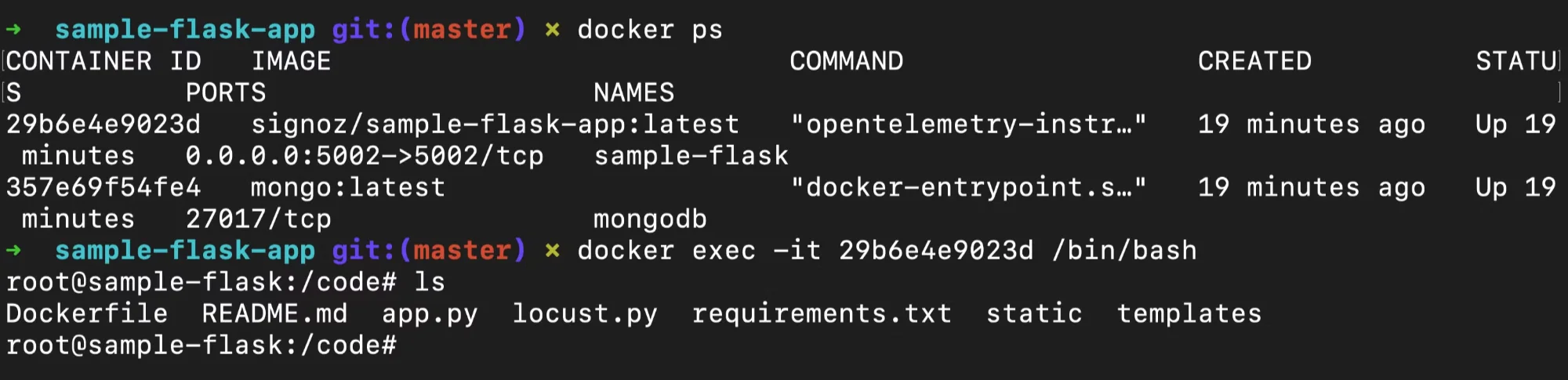

Using the 'ps' Command

One of the simplest and most widely used methods to view running processes in a Docker container is by using the ps command. This command provides a snapshot of the current processes running within the container.

Access the container's shell: To enter a container and execute commands, use `docker exec`:

```bash
docker exec -it <container_name> /bin/bash
```

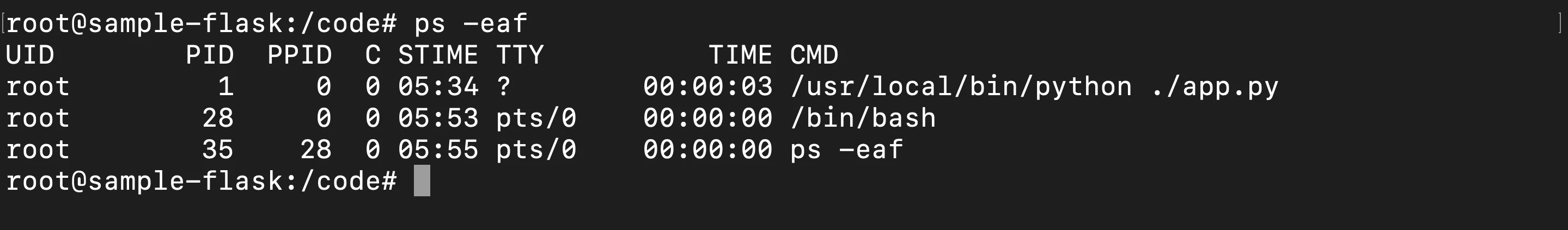

`/bin/bash` starts an interactive shell inside the container, where you can run commands and inspect processes. If the image doesn't include Bash, use `/bin/sh` instead.

Run the `ps` command: Once inside the container, execute the following:

```bash
ps -eaf
```

The `ps -eaf` command lists every process running inside the container in full format, which helps with monitoring and performance troubleshooting. Minimal images may not ship `ps` at all; on Debian-based images it is provided by the `procps` package.

This will list all active processes within the container, including important details such as:

- UID: User ID under which the process is running

- PID: Process ID

- PPID: Parent Process ID

- C: Processor utilization (an integer percentage)

- STIME: Start time of the process

- TTY: Controlling terminal (`?` if the process has none)

- TIME: Amount of CPU time the process has consumed

- CMD: Command that initiated the process

Limitations:

- Manual Execution: This method requires manual execution, making it less ideal for continuous monitoring.

- Snapshot View: It only provides a snapshot of processes at a specific moment, lacking historical data or continuous tracking.
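You can soften the snapshot limitation by capturing `ps -eaf` periodically (for example, with `docker exec <container_name> ps -eaf` from the host) and comparing consecutive snapshots. The Python sketch below (hypothetical helper names) parses procps-style output, as printed by Debian- and Ubuntu-based images, and reports processes that started or exited between two snapshots. It assumes the standard eight-column header (`UID PID PPID C STIME TTY TIME CMD`); BusyBox `ps` prints a different layout and is not handled.

```python
from __future__ import annotations


def parse_ps_ef(output: str) -> dict[int, dict[str, str]]:
    """Parse procps-style `ps -ef` / `ps -eaf` output into {pid: row}."""
    lines = output.strip().splitlines()
    header = lines[0].split()  # UID PID PPID C STIME TTY TIME CMD
    rows = {}
    for line in lines[1:]:
        # CMD is the last column and may contain spaces, so limit the splits
        values = line.split(None, len(header) - 1)
        row = dict(zip(header, values))
        rows[int(row["PID"])] = row
    return rows


def diff_snapshots(before: str, after: str) -> tuple[dict[int, str], dict[int, str]]:
    """Return (started, exited) processes as {pid: command} between two snapshots."""
    old, new = parse_ps_ef(before), parse_ps_ef(after)
    started = {pid: new[pid]["CMD"] for pid in new.keys() - old.keys()}
    exited = {pid: old[pid]["CMD"] for pid in old.keys() - new.keys()}
    return started, exited
```

Comparing by PID alone misses a PID that is reused between snapshots; comparing the `STIME` and `CMD` columns as well would catch that case.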

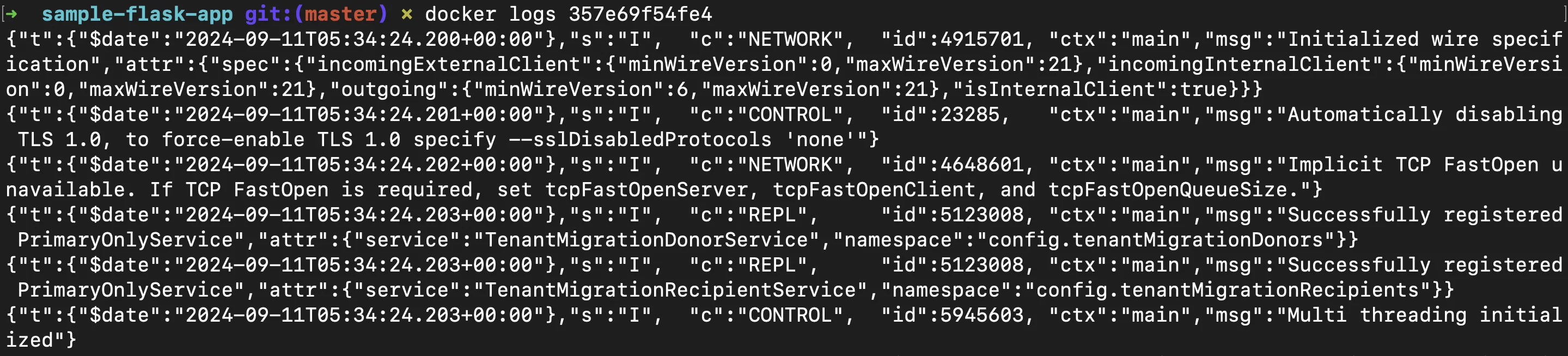

Docker's Built-in Logging

Docker's built-in logging drivers automatically capture logs from containers, streamlining the process of collecting output without requiring manual intervention. Commonly used logging drivers like json-file or syslog can store and manage logs, but they focus primarily on capturing the standard output and error streams of the running containerized processes.

Configure the Docker logging driver: You can set a logging driver for a single container when you start it (with the `--log-driver` and `--log-opt` flags of `docker run`) or globally in the Docker daemon configuration file (`/etc/docker/daemon.json`). For example, to make `json-file` the default driver with rotation options:

```json
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}
```

Restart the Docker daemon: After modifying the configuration, restart the Docker service to apply the changes:

```bash
sudo systemctl restart docker
```

The new defaults apply only to containers created after the restart; existing containers keep the logging configuration they were created with.

View logs: To view the logs from a running container, use the `docker logs` command:

```bash
docker logs <container_name>
```

This command displays the logs from the container’s standard output and standard error streams. Add `-f` to keep following new output.

Key Considerations:

- Focus on Application Output: Docker's native logging primarily captures standard output and error streams, rather than detailed system or process-level metrics like CPU usage or process IDs.

- Log Rotation: Configuring log rotation prevents disk space exhaustion by limiting the size and number of log files.

- Limited Process Insight: By default, these logs won’t include deep insights into individual process behaviour, so you need additional tools or methods (e.g., the `ps` command or monitoring platforms) to gather detailed process information.

Docker's native logging is ideal for basic application monitoring but may need to be complemented with other tools for more granular process tracking.
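If you want to work with the `json-file` driver's output directly, for example in a script on the Docker host, note that each line of the container's log file is a JSON object with `log`, `stream`, and `time` keys. `docker inspect --format '{{.LogPath}}' <container_name>` prints the file's location (reading it usually requires root). This minimal sketch (hypothetical function names) parses those lines and picks out `stderr` output; it does not reassemble very long lines that Docker splits across several entries.

```python
from __future__ import annotations

import json
from typing import Iterable, Iterator, NamedTuple


class LogEntry(NamedTuple):
    time: str    # RFC 3339 timestamp with nanoseconds, as written by Docker
    stream: str  # "stdout" or "stderr"
    message: str


def read_json_file_log(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Parse lines produced by Docker's json-file logging driver."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # "log" keeps the trailing newline the container printed; drop it
        yield LogEntry(record["time"], record["stream"], record["log"].rstrip("\n"))


def errors_only(entries: Iterable[LogEntry]) -> list[LogEntry]:
    """Keep only what the container wrote to standard error."""
    return [e for e in entries if e.stream == "stderr"]
```

Usage: `with open(log_path) as f: for e in errors_only(read_json_file_log(f)): print(e.time, e.message)`.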

Step-by-Step Guide: Logging Processes in a Docker Container

Implementing a comprehensive logging solution for processes running in a Docker container can provide continuous insights into container activity. Below is a simple, customizable approach to logging processes using a wrapper script that logs process information regularly.

Create a wrapper script: This script runs your main application and logs the container's active processes to a file for as long as the application is running.

```bash
#!/bin/bash
LOG_FILE=/var/log/container_processes.log

# Log the initial processes
echo "Initial processes:" >> "$LOG_FILE"
ps -eaf >> "$LOG_FILE"

# Start your main application in the background and remember its PID
/path/to/your/app &
APP_PID=$!

# Log processes every minute while the application is still running
while kill -0 "$APP_PID" 2>/dev/null; do
  echo "$(date): Current processes:" >> "$LOG_FILE"
  ps -eaf >> "$LOG_FILE"
  sleep 60  # Log every minute
done

# Exit with the application's status so the container stops when the app does
wait "$APP_PID"
exit $?
```

Modify your Dockerfile: Next, update your Dockerfile to include the wrapper script and ensure it's executable.

```dockerfile
# Replace "your-base-image" with the actual image you need.
# The image must provide bash and ps (procps).
FROM your-base-image

# Copy the wrapper script into the container
COPY wrapper.sh /wrapper.sh

# Make the wrapper script executable
# (chmod changes file permissions; +x adds the execute bit)
RUN chmod +x /wrapper.sh

# Set the default command to run the wrapper script
CMD ["/wrapper.sh"]
```

Build and run your container: Now that the Dockerfile is set up, build and run your container.

Build the Docker image: This command builds an image from the Dockerfile in the current directory (`.`). The `-t` flag tags the image with a name; here, `<my-logging-container>` is a placeholder for the image name.

```bash
docker build -t <my-logging-container> .
```

Run the container in detached mode:

```bash
docker run -d <my-logging-container>
```

Accessing the Logs: The container logs processes every minute into the `/var/log/container_processes.log` file. To access the log file, enter the container’s shell:

```bash
docker exec -it <container_name> /bin/bash
```

Then view the log file:

```bash
cat /var/log/container_processes.log
```

This setup allows you to log and monitor all active processes running in your container over time, offering a more detailed view than a single `ps` snapshot. Because only the wrapper script writes to `container_processes.log`, its appends don't conflict; if several processes write to the same file, use file locking to avoid interleaved entries. The file also grows without limit, so pair this approach with log rotation (for example, `logrotate`) in long-running containers.
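Because the wrapper script writes a header line before every `ps -eaf` snapshot, its log file can be split back into individual snapshots, for example to chart how many processes the container runs over time. The sketch below assumes the exact header lines the script writes (`Initial processes:` and `<date>: Current processes:`); `split_snapshots` and `process_counts` are hypothetical helpers.

```python
from __future__ import annotations

INITIAL_HEADER = "Initial processes:"
PERIODIC_SUFFIX = ": Current processes:"


def split_snapshots(log_text: str) -> list[tuple[str, list[str]]]:
    """Split the wrapper's log into (label, process rows), one pair per snapshot."""
    snapshots: list[tuple[str, list[str]]] = []
    for line in log_text.splitlines():
        if line == INITIAL_HEADER:
            snapshots.append(("initial", []))
        elif line.endswith(PERIODIC_SUFFIX):
            # The label is the `date` output the script wrote before the suffix
            snapshots.append((line[: -len(PERIODIC_SUFFIX)], []))
        elif snapshots and line.strip() and not line.startswith("UID "):
            snapshots[-1][1].append(line)  # one process row; ps header skipped
    return snapshots


def process_counts(log_text: str) -> list[tuple[str, int]]:
    """How many processes each snapshot recorded."""
    return [(label, len(rows)) for label, rows in split_snapshots(log_text)]
```

A steadily rising count across snapshots is a quick hint that the application is leaking child processes.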

Advanced Techniques for Process Logging

Basic logging is essential for monitoring, but advanced strategies can offer deeper insights, more control, and greater efficiency in managing your containerized applications. Below are some advanced techniques that can enhance your process logging efforts:

Use process managers: In more complex container environments, managing multiple processes efficiently is critical. While Docker containers typically run a single process, there are situations where you may need to run multiple processes inside the same container. You can use a process manager like Supervisor to manage these processes effectively. Supervisor is a powerful tool for controlling and monitoring multiple processes within a container. It allows you to:

Automatically restart failed processes: If a process inside the container crashes, Supervisor can detect the failure and restart it automatically, ensuring high availability.

For example, in your `supervisord.conf` file, you can configure a program to restart automatically with the following directives:

```ini
# Start the process when Supervisor starts
autostart=true
# Restart the process whenever it exits
autorestart=true
```

Log process output: Supervisor provides built-in logging features, capturing each managed process's standard output and error logs, which you can use for troubleshooting and performance analysis.

For example, you can configure the log files for each process in the `supervisord.conf` file:

```ini
# Log standard output
stdout_logfile=/var/log/supervisor/my_app.out.log
# Log standard error
stderr_logfile=/var/log/supervisor/my_app.err.log
```

Unlike `ps -eaf`, which only lists processes, Supervisor actively manages them: it restarts processes automatically when they fail and keeps detailed per-process logs for troubleshooting.

Control process lifecycle: You can configure Supervisor to start, stop, or restart processes based on predefined rules, helping you maintain control over container behavior. These directives belong in the program's `[program:...]` section:

```ini
# Start automatically when Supervisor starts
autostart=true
# Restart automatically whenever the process exits
autorestart=true
# Give up (FATAL state) after 3 consecutive failed start attempts
startretries=3
# The process must stay up for 5 seconds for a start to count as successful
startsecs=5
```

Let's walk through an example of using Supervisor to manage processes inside a Docker container.

Clone the Sample Application Repository: First, clone a sample Python application:

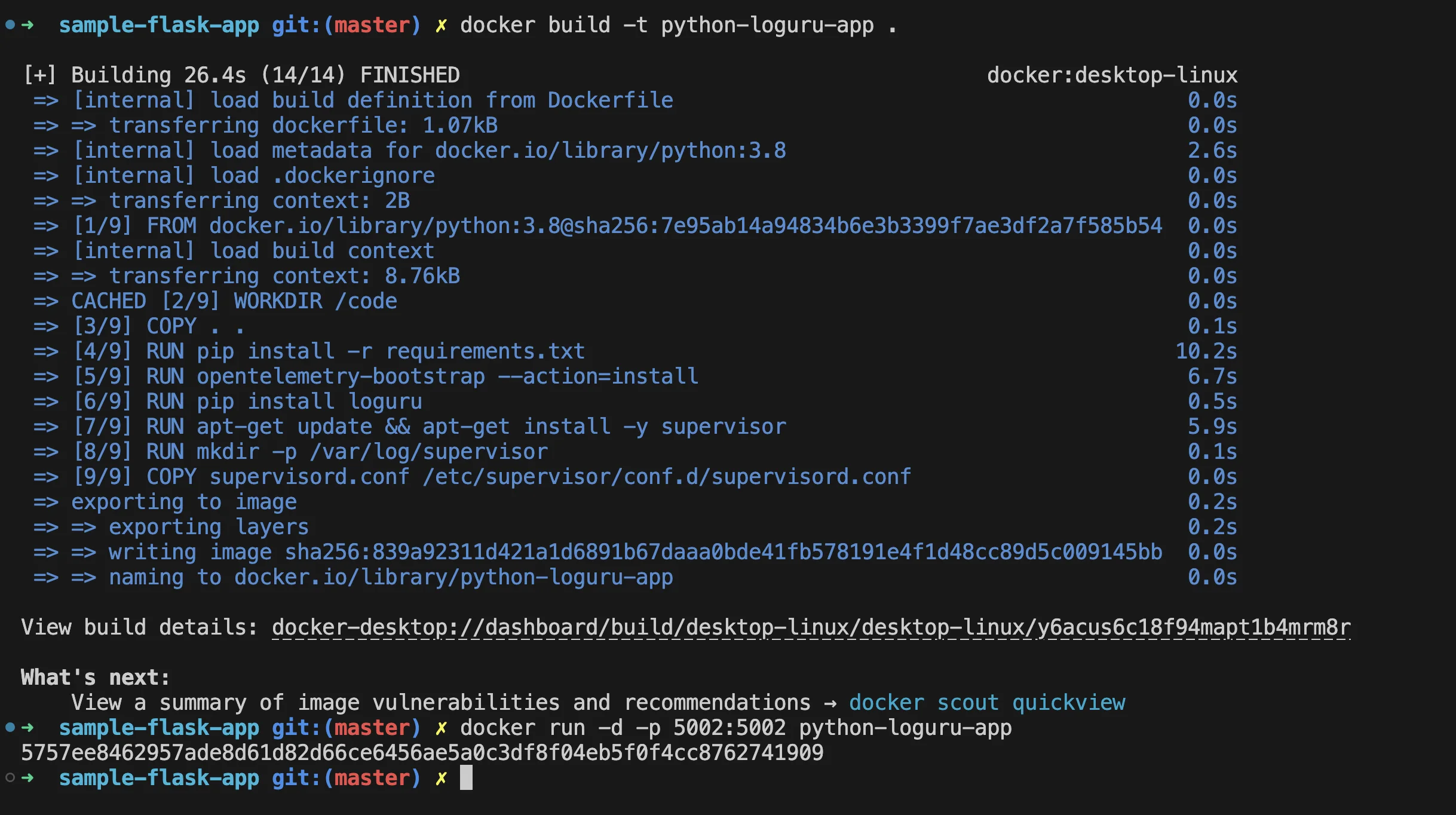

```bash
git clone https://github.com/SigNoz/sample-flask-app
```

Update the Dockerfile: Modify the `Dockerfile` to include Supervisor and configure it to manage your application. Only the last `CMD` in a Dockerfile takes effect, so this one makes Supervisor the container's main process:

```dockerfile
# Install Supervisor
RUN apt-get update && apt-get install -y supervisor

# Create the Supervisor log directory
RUN mkdir -p /var/log/supervisor

# Copy the Supervisor config file
COPY supervisord.conf /etc/supervisor/conf.d/supervisord.conf

# Expose the application's port
EXPOSE 5002

# Run Supervisor, which starts and manages your app
CMD ["/usr/bin/supervisord", "-c", "/etc/supervisor/supervisord.conf"]
```

Create a Supervisor Configuration File: Create a `supervisord.conf` file in the same directory as your Dockerfile to configure Supervisor to manage your Python app:

```ini
[supervisord]
# Stay in the foreground so supervisord remains the container's main process
nodaemon=true

[program:python_app]
command=opentelemetry-instrument python ./app.py
autostart=true
autorestart=true
stderr_logfile=/var/log/supervisor/python_app.err.log
stdout_logfile=/var/log/supervisor/python_app.out.log
startretries=3
startsecs=5
```

Build and Run the Docker Container

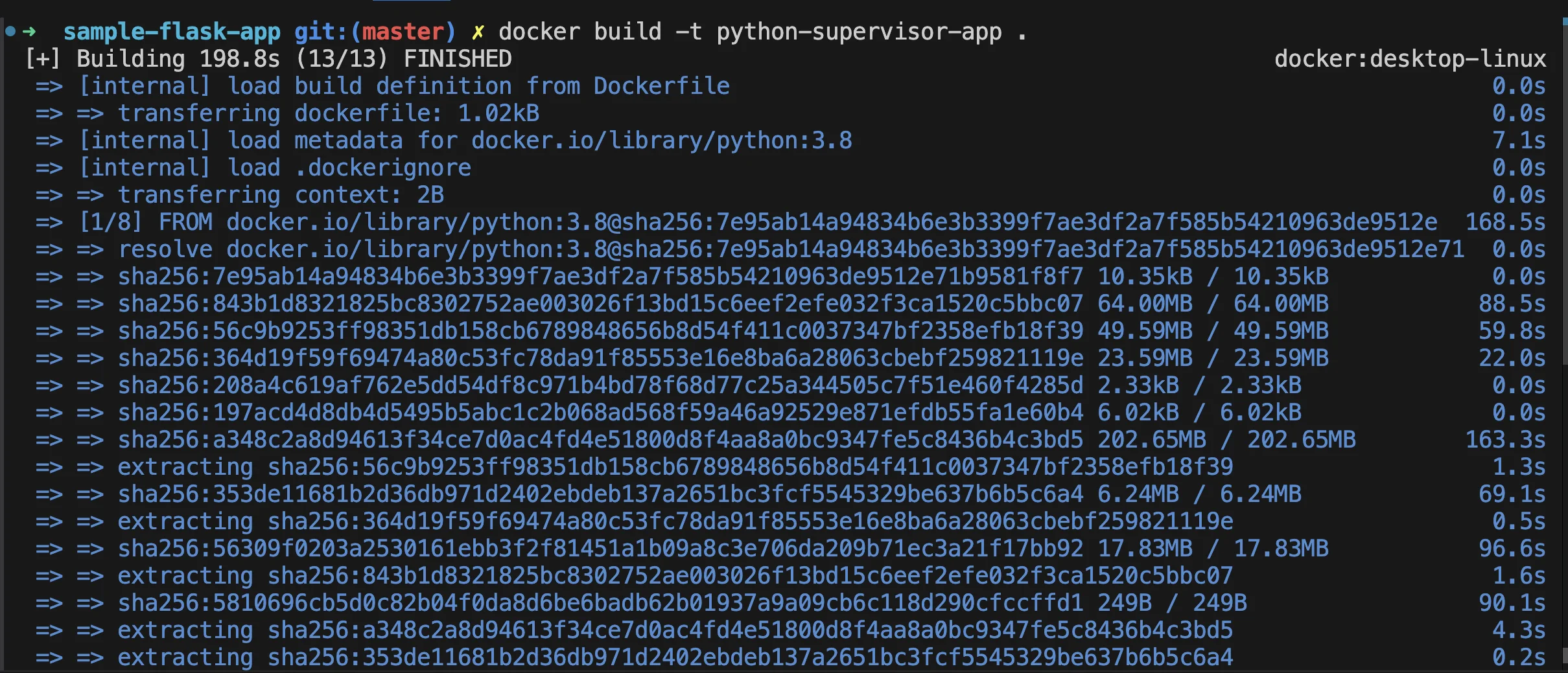

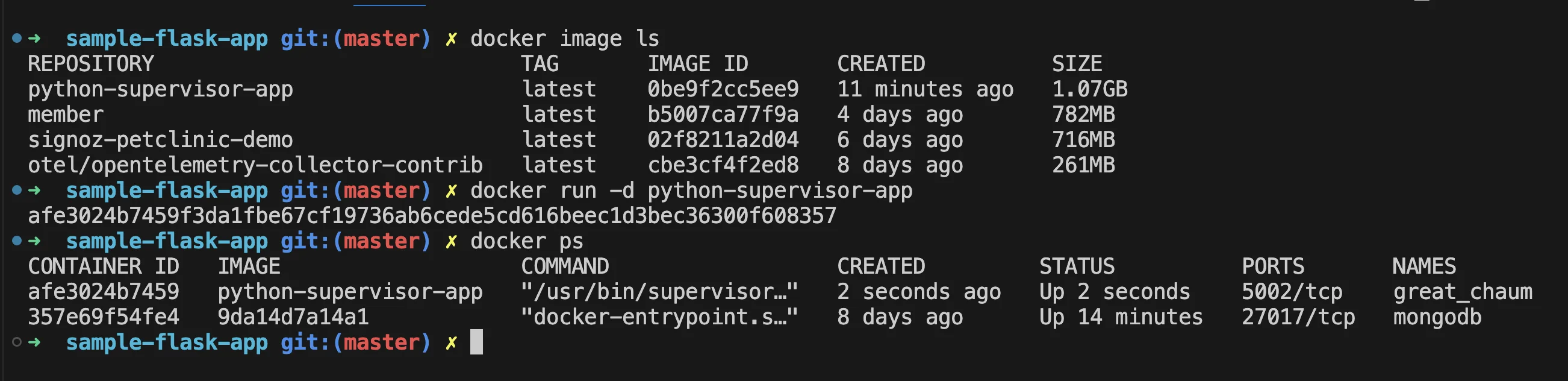

- Build the Docker image:

```bash
docker build -t python-supervisor-app .
```

- Run the Docker container:

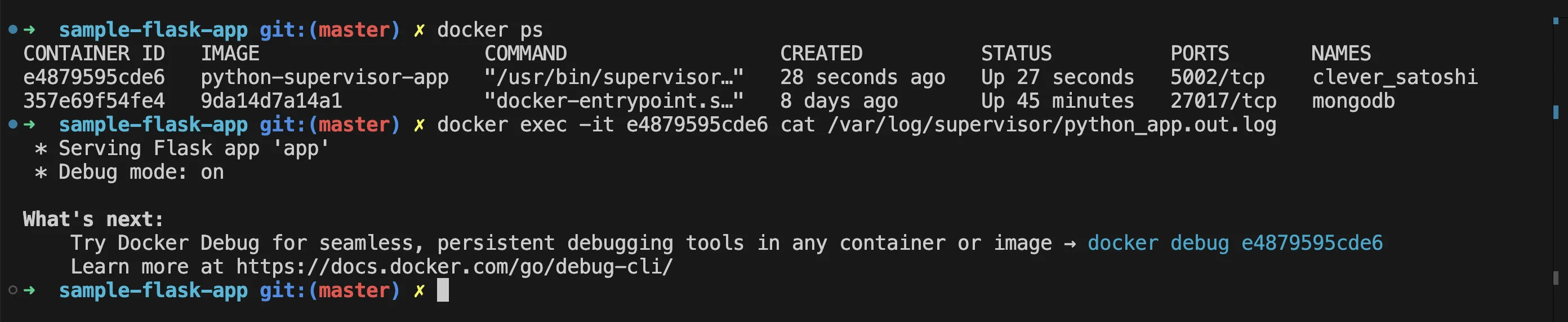

```bash
docker run -d -p 5002:5002 python-supervisor-app
```

Where to See Supervisor Logs: You can access Supervisor logs directly within the container:

Application logs:

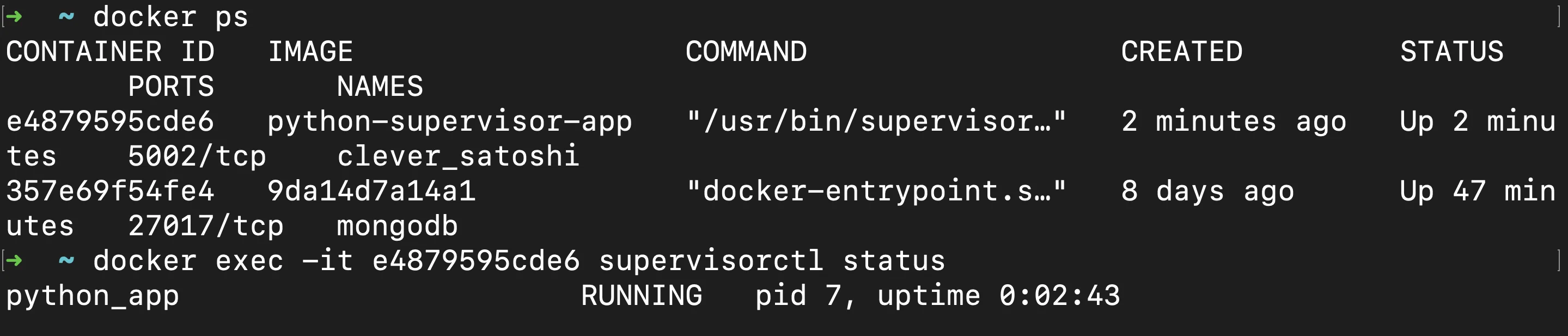

```bash
docker exec -it <container_id> cat /var/log/supervisor/python_app.out.log
```

Process status:

```bash
docker exec -it <container_id> supervisorctl status
```

Using a process manager like Supervisor is particularly useful in environments requiring high reliability and more granular control over process execution within containers.
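The output of `supervisorctl status` can also feed a health check or an alert. The sketch below assumes the usual one-line-per-program layout (`name  STATE  description`, e.g. `python_app  RUNNING  pid 7, uptime 0:01:23`). The parsing and the choice to treat every state other than `RUNNING` as unhealthy are our assumptions, so transient states such as `STARTING` will also be flagged.

```python
from __future__ import annotations

HEALTHY_STATES = {"RUNNING"}


def parse_status(output: str) -> dict[str, tuple[str, str]]:
    """Map program name -> (state, description) from `supervisorctl status` output."""
    programs = {}
    for line in output.splitlines():
        parts = line.split(None, 2)  # name, state, free-text description
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        name, state = parts[0], parts[1]
        programs[name] = (state, parts[2].strip() if len(parts) == 3 else "")
    return programs


def unhealthy(programs: dict[str, tuple[str, str]]) -> list[str]:
    """Names of programs that are not in a healthy state, sorted."""
    return sorted(name for name, (state, _) in programs.items() if state not in HEALTHY_STATES)
```

You could feed it the output of `docker exec <container_id> supervisorctl status` and alert whenever `unhealthy(...)` is non-empty.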

Implement custom logging libraries: While Docker provides basic logging mechanisms, integrating custom logging libraries into your application code can give you more detailed and tailored process logging. By adding logging directly into your application, you gain several benefits:

- Granular logging: Custom logging allows you to log specific events, variables, and process states that are important for your application. This can include detailed logs about internal operations, performance metrics, or application-specific errors.

- Structured logging: Libraries like Logrus (for Go) or Winston (for Node.js) allow you to create structured logs, which are easier to parse and analyze. Structured logs can include key-value pairs, timestamps, and contextual information that make troubleshooting much faster.

- Enhanced log formats: With custom libraries, you can log in formats like JSON, making integrating with log management tools or dashboards easier.

- Application-level insights: By embedding logging into your code, you can capture information unavailable through system-level logging. For example, you can track specific user actions, API calls, or business logic execution paths.
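Structured logging does not require a third-party library. For comparison with the library-based example, here is a minimal sketch using only Python's standard `logging` module: a custom formatter (the `JsonFormatter` and `make_logger` names are ours) that writes one JSON object per line to standard output, where Docker's logging driver can pick it up. Extra key-value context is passed through a `fields` dictionary in logging's `extra=` argument; that is a convention of this sketch, not of the `logging` module.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": self.service,
            "logger": record.name,
            "pid": record.process,
            "message": record.getMessage(),
        }
        # Structured context passed as extra={"fields": {...}}
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            payload.update(fields)
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def make_logger(service: str, stream=sys.stdout) -> logging.Logger:
    """A logger that writes JSON lines for `service` to `stream`."""
    logger = logging.getLogger(service)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.handlers[:] = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Usage: `log = make_logger("todo-app")` followed by `log.info("task updated", extra={"fields": {"task_id": 42}})` prints a single JSON line containing `"task_id": 42` (the service name here is hypothetical).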

Sample:

Let's walk through an example of integrating a custom logging library to handle log management.

Clone the Sample Application Repository: First, clone a sample Python application:

```bash
git clone https://github.com/SigNoz/sample-flask-app
```

Choose a Custom Logging Library: For this example, we'll use `loguru`, a popular logging library that offers a more flexible and user-friendly setup than Python's built-in `logging` module.

Update Your Application Code: Integrate the custom logging library into your application code. For this example, we will add it to the `app.py` file.

Install `loguru`: Ensure `loguru` is installed via pip:

```bash
pip install loguru
```

Update your `app.py`:

- Add the following import:

```python
from loguru import logger
```

- Configure `loguru` at the top of your file:

```python
# Write DEBUG and above to logs/app.log, start a new file every week,
# and delete rotated files older than one month
logger.add("logs/app.log", rotation="1 week", level="DEBUG", retention="1 month")
```

- Add logging statements to your existing routes and functions. Log request information and exceptions:

```python
@app.route("/list")
def lists():
    logger.info("Request to /list endpoint")
    try:
        ...  # your existing code, including its return value
    except requests.RequestException as e:
        logger.error(f"Request failed: {e}")
```

- Update other routes similarly to log information and errors:

```python
@app.route("/done")
def done():
    logger.info("Request to /done endpoint")
    try:
        ...  # your existing code, including its return value
    except Exception as e:
        logger.error(f"Error updating task: {e}")
```

- Ensure the application start is logged:

```python
if __name__ == "__main__":
    logger.info("Starting Flask application")
    app.run(host="0.0.0.0", port=5002, debug=True)
```

Update `requirements.txt`: Ensure your `requirements.txt` includes `loguru`:

```text
flask
loguru  # add this
opentelemetry-api
opentelemetry-sdk
opentelemetry-instrumentation-flask
```

Update Your Dockerfile: If your Dockerfile installs dependencies with `pip install -r requirements.txt`, the change above is enough. Otherwise, install the library explicitly:

```dockerfile
# Install the loguru library
RUN pip install loguru
```

Build and Run the Docker Container: Rebuild your Docker image and run the container:

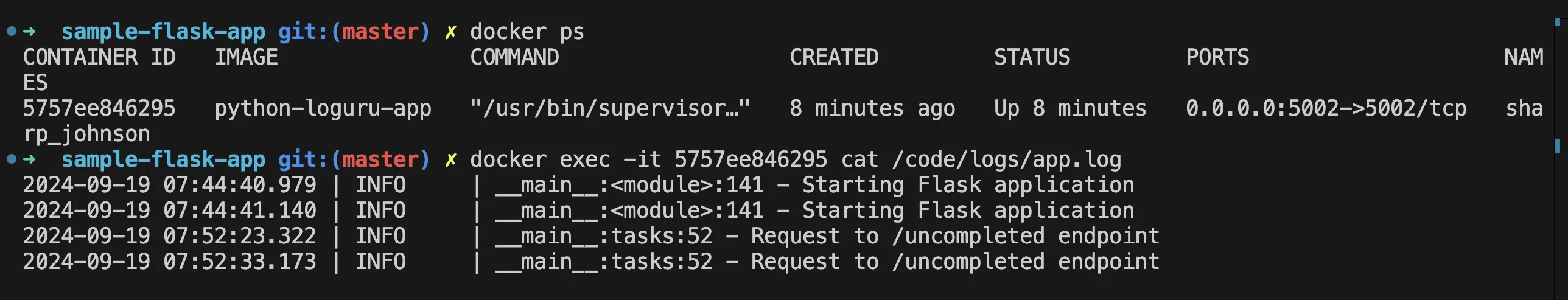

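```bash
docker build -t python-loguru-app .
docker run -d -p 5002:5002 python-loguru-app
```

Accessing Logs: `loguru` writes `logs/app.log` relative to the application's working directory, which is `/code` in this example. To view the logs it generates:

```bash
docker exec -it <container_id> cat /code/logs/app.log
```

Because `loguru` keeps its default `stderr` sink unless you remove it, the same messages also appear in `docker logs <container_id>` when the app runs as the container's main process.

Integrating logging libraries into your applications is a good approach for teams that need precise control over their logs and want to capture application-specific data.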

Leverage container orchestration: Managing and logging processes manually in large-scale deployments with multiple containers can quickly become overwhelming. This is where container orchestration platforms like Kubernetes come into play. Kubernetes offers advanced features for managing and monitoring containerized environments, including robust logging capabilities.

Key advantages of using Kubernetes for process logging include:

- Centralized logging: Kubernetes writes each container's output to log files on its node, and a node-level agent such as Fluentd can ship those logs to a central backend such as the ELK Stack (Elasticsearch, Logstash, Kibana). This lets you monitor and analyse logs from every container in your cluster in one place.

- Advanced monitoring: Kubernetes works smoothly with monitoring tools such as Prometheus and Grafana, allowing you to gather, visualise, and analyse process information. You can configure alerts based on resource use, process failures, and other relevant data.

- Automatic log rotation: The kubelet rotates container log files (configurable through its `containerLogMaxSize` and `containerLogMaxFiles` settings), which keeps logs from filling up node disks. This ensures that logging doesn’t become a burden on container performance.

- Scalability: Kubernetes excels at managing containerized applications at scale. Its logging and monitoring features can scale as your deployment grows, ensuring consistent visibility across all your containers.

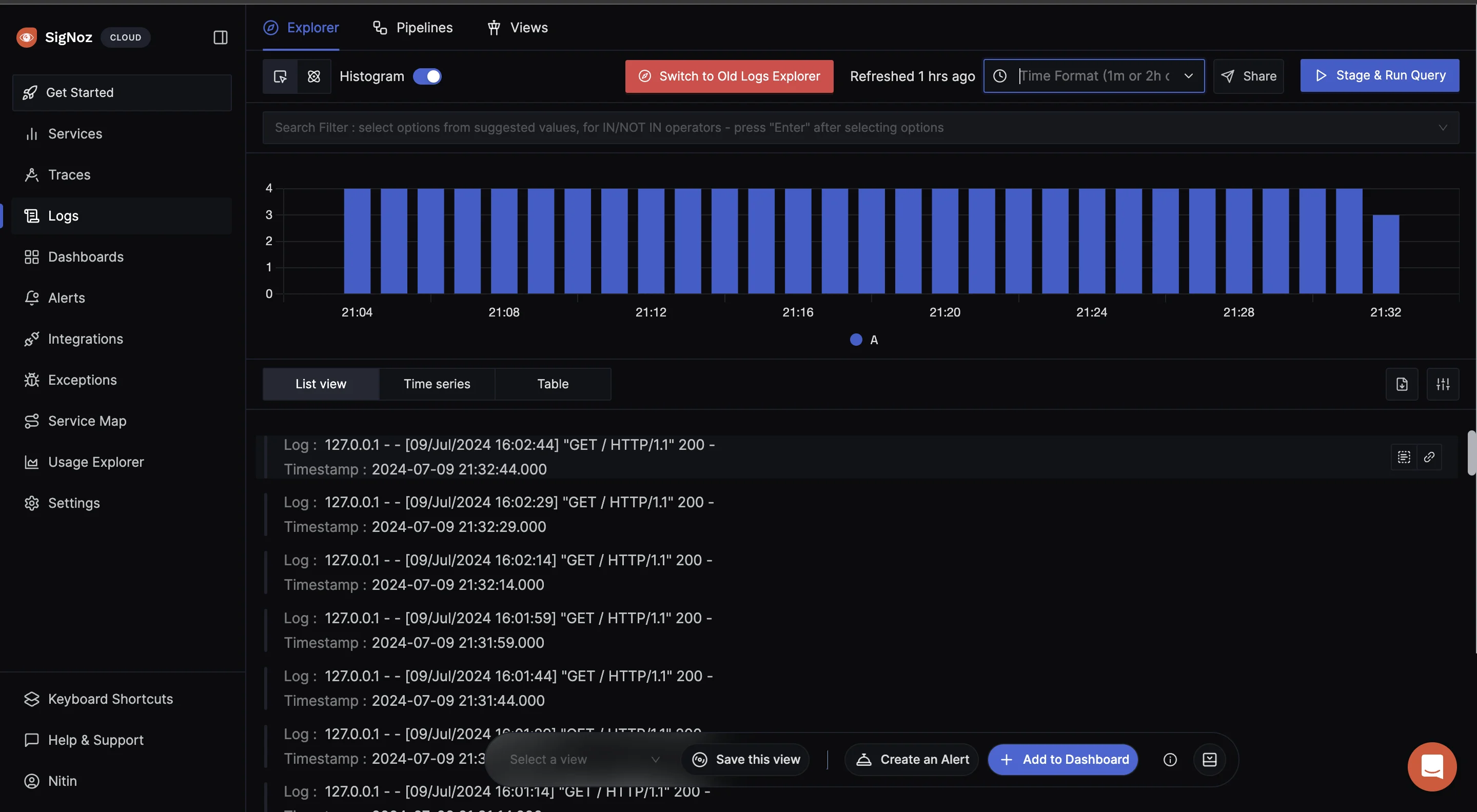

Monitoring Docker Container Processes with SigNoz

To effectively monitor Docker logs and overcome the limitations of the docker logs -f command, using an advanced observability platform like SigNoz can be highly beneficial. SigNoz is an open-source observability tool that provides end-to-end monitoring, troubleshooting, and alerting capabilities for your applications and infrastructure.

Here's how you can leverage SigNoz for monitoring Docker logs:

- Set Up your Docker Containers

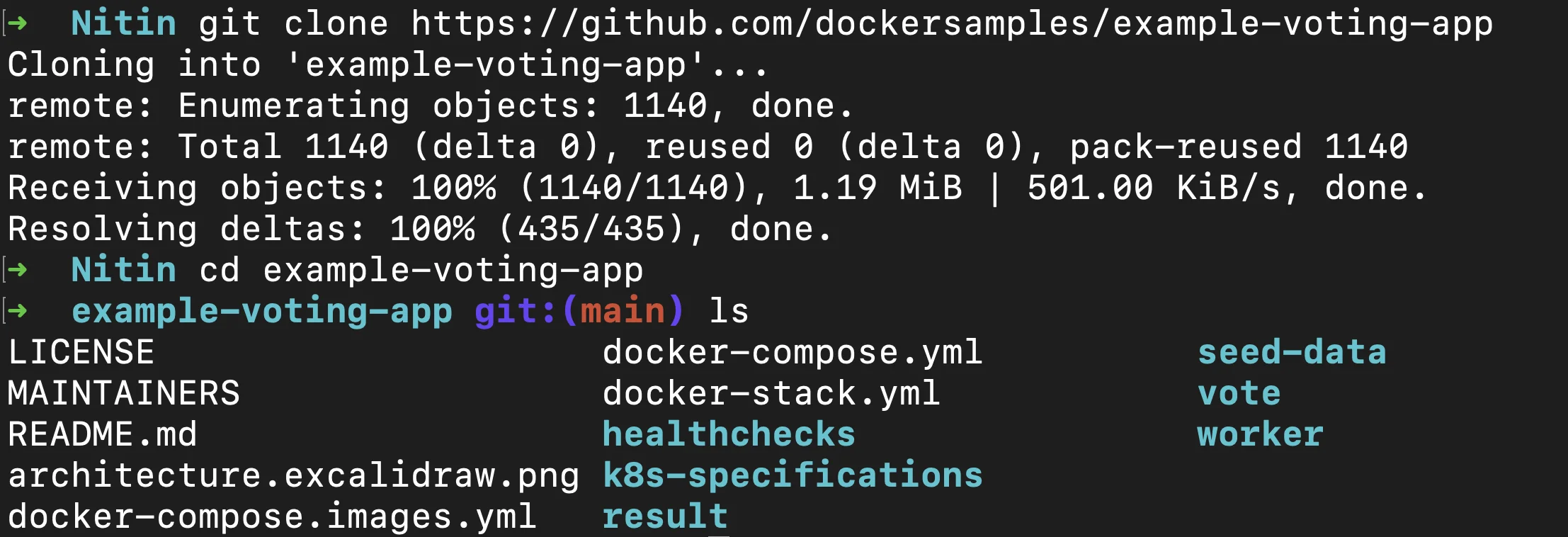

A project folder has been provided with Docker Compose configuration that sets up a sample application. Clone the repository and navigate to the project directory.

```bash
git clone https://github.com/dockersamples/example-voting-app
cd example-voting-app
```

Note: Skip this step if you have your own running Docker containers and Docker Compose configuration.

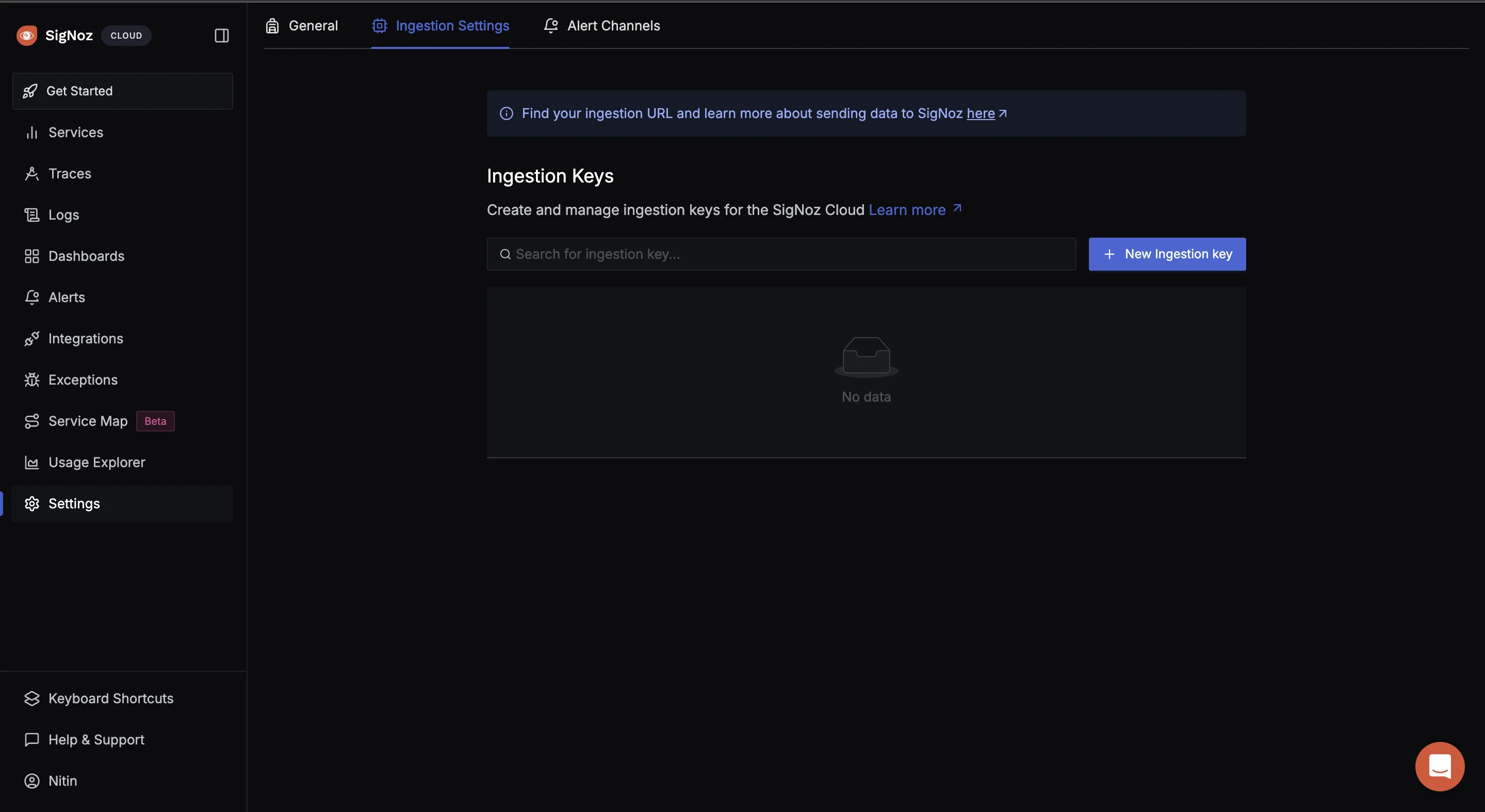

- Create a SigNoz Cloud Account

SigNoz Cloud provides a 30-day free trial period. This demo uses SigNoz Cloud, but you can choose to use the open-source version.

- Add .env File to the Root Project Folder

In the root directory of your project folder, create a new file named .env and paste the below into it:

```text
OTEL_COLLECTOR_ENDPOINT=ingest.{region}.signoz.cloud:443
SIGNOZ_INGESTION_KEY=***
```

- `OTEL_COLLECTOR_ENDPOINT`: Specifies the address of the SigNoz collector where your application will send its telemetry data.

- `SIGNOZ_INGESTION_KEY`: Authenticates your application with the SigNoz collector.

Note: Replace {region} with your SigNoz region and the `***` placeholder with your actual ingestion key.

To obtain the SigNoz ingestion key and region, navigate to the “Settings” page in your SigNoz dashboard. The ingestion key and region are under the “Ingestion Settings” tab.
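A typo in this file, or in a variable name that refers to it, only surfaces later as missing logs, so a quick pre-flight check can save time. This is a minimal sketch with hypothetical helper names; it handles only simple `KEY=VALUE` lines (no quoting or `export`) and checks only the two variables above and the placeholders from the template. The region and key values used in the check below are illustrative.

```python
from __future__ import annotations

REQUIRED = ("OTEL_COLLECTOR_ENDPOINT", "SIGNOZ_INGESTION_KEY")


def parse_env_file(text: str) -> dict[str, str]:
    """Minimal KEY=VALUE parser for a .env file (comments and blank lines skipped)."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip()
    return env


def check_env(env: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the file looks usable."""
    problems = [f"{k} is missing or empty" for k in REQUIRED if not env.get(k)]
    endpoint = env.get("OTEL_COLLECTOR_ENDPOINT", "")
    if "{region}" in endpoint:
        problems.append("replace {region} in OTEL_COLLECTOR_ENDPOINT with your SigNoz region")
    elif endpoint and not endpoint.rsplit(":", 1)[-1].isdigit():
        problems.append("OTEL_COLLECTOR_ENDPOINT should end with a port, e.g. :443")
    if env.get("SIGNOZ_INGESTION_KEY") == "***":
        problems.append("SIGNOZ_INGESTION_KEY still holds the *** placeholder")
    return problems
```

Usage: `with open(".env") as f: print(check_env(parse_env_file(f.read())))`, run from the project root before `docker compose up`.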

- Set Up the OpenTelemetry Collector Config

An OTel Collector is a vendor-agnostic service that receives, processes, and exports telemetry data (metrics, logs, and traces) from various sources to various destinations.

In the root of your project folder, create a file otel-collector-config.yaml and paste the below contents in it:

```yaml
receivers:
  tcplog/docker:
    listen_address: "0.0.0.0:2255"
    operators:
      - type: regex_parser
        regex: '^<([0-9]+)>[0-9]+ (?P<timestamp>[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}(\.[0-9]+)?([zZ]|([\+-])([01]\d|2[0-3]):?([0-5]\d)?)?) (?P<container_id>\S+) (?P<container_name>\S+) [0-9]+ - -( (?P<body>.*))?'
        timestamp:
          parse_from: attributes.timestamp
          layout: '%Y-%m-%dT%H:%M:%S.%LZ'
      - type: move
        from: attributes["body"]
        to: body
      - type: remove
        field: attributes.timestamp
      - type: filter
        id: signoz_logs_filter
        expr: 'attributes.container_name matches "^signoz-(logspout|frontend|alertmanager|query-service|otel-collector|otel-collector-metrics|clickhouse|zookeeper)"'

processors:
  batch:
    send_batch_size: 10000
    send_batch_max_size: 11000
    timeout: 10s

exporters:
  otlp:
    endpoint: ${env:OTEL_COLLECTOR_ENDPOINT}
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": ${env:SIGNOZ_INGESTION_KEY}

service:
  pipelines:
    logs:
      receivers: [tcplog/docker]
      processors: [batch]
      exporters: [otlp]
```

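The above configuration sets up the OpenTelemetry Collector to listen on 0.0.0.0:2255 for incoming Docker logs sent over TCP in syslog format. A regex parser extracts the timestamp, container fields, and message body from each line, and the filter operator drops logs that come from SigNoz's own containers (names starting with `signoz-` followed by one of the listed components). The remaining logs are batched for efficient handling and exported over TLS via OTLP to the `OTEL_COLLECTOR_ENDPOINT` specified in your `.env` file.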
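One way to check what the `regex_parser` and `filter` operators will do is to run the same regular expression against a sample line locally. Python's `re` module accepts the `(?P<name>...)` groups used in the config, so the pattern can be reused with single backslashes. The sample lines below are hand-written to match the format the regex expects; they are not captured logspout output, and the `host-1` and `vote` values are made up. This approximates, but does not reproduce, the collector's Go regex engine.

```python
import re

# The receiver's regex from otel-collector-config.yaml, split for readability
SYSLOG_RE = re.compile(
    r"^<([0-9]+)>[0-9]+ "
    r"(?P<timestamp>[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}"
    r"(\.[0-9]+)?([zZ]|([\+-])([01]\d|2[0-3]):?([0-5]\d)?)?) "
    r"(?P<container_id>\S+) (?P<container_name>\S+) [0-9]+ - -( (?P<body>.*))?"
)
# The filter expression: logs from SigNoz's own containers are dropped
SIGNOZ_OWN = re.compile(
    r"^signoz-(logspout|frontend|alertmanager|query-service|otel-collector|"
    r"otel-collector-metrics|clickhouse|zookeeper)"
)


def parse_line(line: str):
    """Mimic the receiver: regex_parser first, then the signoz_logs_filter."""
    m = SYSLOG_RE.match(line)
    if m is None:
        return None  # the real collector would report a parse error instead
    fields = m.groupdict()
    if SIGNOZ_OWN.match(fields["container_name"]):
        return None  # dropped by the filter operator
    return fields
```

If a line from your own setup returns `None` here and isn't from a `signoz-` container, the regex probably doesn't match its format.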

- Add Services to the Docker Compose File

In your existing Docker Compose file, add the below configuration:

```yaml
# Merge these two entries into your existing top-level services: key
services:
  otel-collector:
    image: signoz/signoz-otel-collector:${OTELCOL_TAG:-0.79.7}
    container_name: signoz-otel-collector
    command:
      [
        "--config=/etc/otel-collector-config.yaml",
        "--feature-gates=-pkg.translator.prometheus.NormalizeName"
      ]
    volumes:
      - ./otel-collector-config.yaml:/etc/otel-collector-config.yaml
    environment:
      - OTEL_RESOURCE_ATTRIBUTES=host.name=signoz-host,os.type=linux
      - DOCKER_MULTI_NODE_CLUSTER=false
      - LOW_CARDINAL_EXCEPTION_GROUPING=false
    env_file:
      - ./.env
    ports:
      - "4317:4317" # OTLP gRPC receiver
      - "4318:4318" # OTLP HTTP receiver
    restart: on-failure

  logspout:
    image: "gliderlabs/logspout:v3.2.14"
    container_name: signoz-logspout
    volumes:
      - /etc/hostname:/etc/host_hostname:ro
      - /var/run/docker.sock:/var/run/docker.sock
    command: syslog+tcp://otel-collector:2255
    depends_on:
      - otel-collector
    restart: on-failure
```

The above Docker Compose configuration sets up two services:

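- `otel-collector`:
  - Uses the SigNoz OpenTelemetry Collector image.
  - Mounts the `otel-collector-config.yaml` file into the container.
  - Loads environment variables from the `.env` file.
  - Exposes ports `4317` (OTLP gRPC receiver) and `4318` (OTLP HTTP receiver). The collector configuration above defines only the `tcplog/docker` receiver, so these ports are used only if you also add an OTLP receiver.

- `logspout`:
  - Uses the `gliderlabs/logspout` image to collect logs from other Docker containers through the mounted Docker socket.
  - Forwards logs in syslog format over TCP to the `otel-collector` service on port `2255`.
  - Depends on the `otel-collector` service, so Compose starts the collector first. `depends_on` only orders startup and doesn't wait for the collector to be ready; `restart: on-failure` helps if logspout exits because it cannot connect yet.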

- Start the Docker Compose file

Run the below command in your terminal to start the containers:

```bash
docker compose up -d
```

If it runs without any error, navigate to your SigNoz Cloud. Under the “Logs” tab, you should see the incoming logs.

Best Practices for Docker Container Process Logging

Effective process logging in Docker containers is key to ensuring your applications run smoothly and securely. However, to get the most out of your logging setup, following certain best practices is essential. These guidelines will help you balance the need for detailed information with maintaining performance and security.

- Balance Detail and Performance: When logging processes in Docker containers, it's crucial to capture all relevant information without compromising system performance. Logging too much detail can generate excessive noise and slow down your application, while too little detail may make it difficult to troubleshoot issues. A balanced approach ensures that logs are informative but do not overwhelm the system or complicate analysis. Define clear logging levels (e.g., debug, info, error) and use them appropriately to control the verbosity of your logs based on your environment (e.g., development vs. production).

- Implement log rotation: Continuous logging can cause log files to grow significantly over time, which may result in excessive disk usage or even out-of-space errors. Log rotation mitigates this by regularly archiving or deleting older log files. Configure rotation in Docker with the `max-size` and `max-file` options of the `json-file` logging driver, or use an external logging solution such as `syslog`.

- Secure sensitive data: Logs can unintentionally expose sensitive information such as credentials, tokens, or personal data. Design your logging strategy to avoid logging sensitive information, or sanitize logs before they are stored or transmitted. Keep credentials in secrets management tools (e.g., Docker secrets, AWS Secrets Manager) rather than hard-coding them, and enforce redaction rules where necessary.

- Standardize logging: Consistent logging across all containers makes it easier to aggregate and analyze logs. Establish a standardized format that includes timestamps, severity levels, service identifiers, and other relevant metadata. This consistency ensures seamless integration with monitoring and alerting tools. Adopt structured logging formats such as JSON, which enable more sophisticated querying and log management across distributed systems.

- Centralized Log Management: In multi-container environments, having each container manage its own logs can become chaotic. Implement a centralized log management solution like SigNoz to collect logs from all containers in a single location, making it easier to monitor, analyze, and troubleshoot system-wide events.

- Monitor and Alert on Logs: Logging should not be passive; actively monitor logs for errors, warnings, or other significant events. Set up automated alerts to notify you of critical issues in real time. Use log monitoring tools to create alerts based on patterns or specific events, ensuring that you can respond quickly to operational issues or security incidents.
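Redaction is easiest to enforce at a single choke point. In Python's standard `logging` module, a `Filter` attached to a handler sees every record that handler writes (including records propagated from child loggers), so it can mask secrets before they reach `stdout` or a file. This is a minimal sketch: the `SECRET_PATTERNS` list is a hypothetical starting point that catches `key=value` secrets and bearer tokens, not a complete rule set, and it redacts only the message text (tracebacks attached via `exc_info` are not scrubbed).

```python
import logging
import re

# Hypothetical starting patterns; extend them for your own data
SECRET_PATTERNS = [
    (re.compile(r"(?i)\b(password|passwd|token|api[_-]?key|secret)=\S+"), r"\1=[REDACTED]"),
    (re.compile(r"(?i)\bBearer\s+[A-Za-z0-9._~+/-]+=*"), "Bearer [REDACTED]"),
]


class RedactingFilter(logging.Filter):
    """Mask secrets in the final message before any handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first
        for pattern, replacement in SECRET_PATTERNS:
            message = pattern.sub(replacement, message)
        # Store the redacted text so formatters don't re-insert the original args
        record.msg, record.args = message, ()
        return True  # keep the record, only its content changes
```

Attach it with `handler.addFilter(RedactingFilter())` on each handler that leaves the process, such as the stream handler Docker captures.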

Troubleshooting Common Issues

While implementing process logging in Docker containers is essential for monitoring and troubleshooting, several common issues can arise. Understanding these challenges and knowing how to resolve them ensures that your logging setup remains reliable, efficient, and secure.

High log volume: One of the most common problems in Docker container logging is the generation of large log volumes. This can occur due to excessive logging of minor events, high traffic, or long-running processes. Large log files can overwhelm your storage and degrade system performance if not properly managed.

How to Manage High Log Volume:

Log Rotation: As mentioned earlier, implementing log rotation prevents individual log files from growing too large. Docker provides built-in options for rotating logs based on size and number of files. Configuring these options ensures that your logs remain manageable:

```bash
docker run --log-opt max-size=10m --log-opt max-file=5 <my-image>
```

This command limits each log file to 10 MB and keeps at most 5 log files per container (including the active one); once that limit is reached, the oldest file is deleted. These options apply to Docker's default `json-file` logging driver and to the `local` driver.
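Docker's `max-size` and `max-file` options only govern the logs the logging driver stores for a container's stdout and stderr. If your application also writes its own log files inside the container, a similar size-and-count policy can be applied in-process. The sketch below uses Python's standard `RotatingFileHandler` and, following the namer/rotator pattern from the Python logging cookbook, gzips each rotated file. The defaults are chosen to mirror the 10 MB / 5-file command above, and `/var/log/myapp/app.log` is a hypothetical path.

```python
import gzip
import logging
import logging.handlers
import os
import shutil


def gzip_namer(default_name: str) -> str:
    # Rotated files get a .gz suffix: app.log.1 -> app.log.1.gz
    return default_name + ".gz"


def gzip_rotator(source: str, dest: str) -> None:
    # Compress the file being rotated out, then delete the uncompressed original.
    with open(source, "rb") as src, gzip.open(dest, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(source)


def make_rotating_logger(path: str, max_bytes: int = 10 * 1024 * 1024,
                         backup_count: int = 4) -> logging.Logger:
    # backupCount counts rotated files only: 4 backups + the active file = 5 files,
    # matching Docker's max-file=5, which includes the active file.
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backup_count)
    handler.namer = gzip_namer
    handler.rotator = gzip_rotator
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger = logging.getLogger(f"rotating.{path}")
    logger.setLevel(logging.INFO)
    logger.handlers[:] = [handler]
    logger.propagate = False
    return logger


if __name__ == "__main__":
    # Hypothetical in-container path; the directory must exist and be writable.
    log = make_rotating_logger("/var/log/myapp/app.log")
    log.info("service started")
```

With these defaults you end up with `app.log` plus at most `app.log.1.gz` through `app.log.4.gz`; on each rotation the oldest compressed file is removed.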

Log Compression: Compressing older log files can significantly reduce the amount of storage used by archived logs. Many log rotation tools can compress rotated files into formats like `.gz` to save space; Docker's `json-file` driver, for example, accepts `--log-opt compress=true` to compress its rotated files.

Filter Logs: Another approach to reducing log volume is to filter out non-essential log entries. You can do this by setting an appropriate minimum logging level (e.g., ERROR, WARNING, or INFO) and filtering out DEBUG- or INFO-level entries in production environments, where they are often unnecessary.
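Level-based filtering is easiest to manage when the threshold comes from configuration rather than code. The sketch below, using Python's standard `logging` module, reads the threshold from a `LOG_LEVEL` environment variable (a hypothetical name chosen for this example) and defaults to WARNING, so DEBUG and INFO records are discarded by the logger before they are formatted or written.

```python
import logging
import os
import sys


def configure_logging(stream=sys.stderr, default_level: str = "WARNING") -> logging.Logger:
    # LOG_LEVEL is a hypothetical variable name chosen for this example.
    level = os.environ.get("LOG_LEVEL", default_level).upper()
    logger = logging.getLogger("app")
    logger.setLevel(level)  # records below this level are discarded early
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.handlers[:] = [handler]
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = configure_logging()
    log.debug("cache lookup details")    # dropped unless LOG_LEVEL=DEBUG
    log.info("request handled")          # dropped unless LOG_LEVEL is INFO or DEBUG
    log.warning("disk usage above 90%")  # emitted at the default level
```

Because the level is read at startup, you can raise verbosity for a single troubleshooting run with `docker run -e LOG_LEVEL=DEBUG ...` without rebuilding the image.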

Reducing log volume helps keep logging sustainable and limits its impact on your system's performance.

Permission issues: In some cases, logging processes within a container might encounter permission issues. These typically arise when the container's logging process, or the user the container runs as, lacks permission to write to specific directories or log files.

How to Resolve Permission Issues:

Container User Permissions: Make sure the process responsible for writing logs inside the container has the correct user permissions. Unless the image or the `docker run --user` flag specifies otherwise, containers run as the `root` user, but for security reasons you may want to run processes as a non-root user. In that case, ensure that this user has write permission for the designated logging directory. You can set the user that runs the container's processes with the `USER` directive in your Dockerfile (the user must already exist in the image, for example created with `useradd` or `adduser` in an earlier `RUN` step):

```dockerfile
USER myuser
```

Bind Mount Permissions: If you're using bind mounts to store logs on the host machine, ensure that the directory on the host system has the correct permissions. The container cannot write logs if the mount is read-only or if the container's user lacks access rights to the directory. Ownership is matched by numeric UID and GID rather than by user name, so adjust the host directory with `chown` or `chmod` so that the container user's UID can write to it.
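One way to catch these permission problems early is a startup check that tries to write to the log directory before the application starts. This is a hypothetical helper, not part of any Docker tooling, and `/var/log/myapp` is an assumed path. It actually creates and deletes a temporary file instead of relying on `os.access()`, because Python's documentation notes that I/O can still fail even when `access()` reports success (particularly on network filesystems).

```python
import os
import sys
import tempfile


def check_log_dir_writable(path: str) -> tuple[bool, str]:
    """Try to create (and automatically delete) a temporary file in `path`."""
    try:
        # NamedTemporaryFile removes the file again when the block exits.
        with tempfile.NamedTemporaryFile(dir=path):
            pass
    except OSError as exc:
        # Covers missing directories, permission errors, and read-only mounts.
        # os.getuid() is POSIX-only, which is fine inside a Linux container.
        return False, f"uid={os.getuid()} cannot write to {path}: {exc}"
    return True, f"uid={os.getuid()} can write to {path}"


if __name__ == "__main__":
    # /var/log/myapp is a hypothetical default; pass your log directory instead.
    log_dir = sys.argv[1] if len(sys.argv) > 1 else "/var/log/myapp"
    ok, message = check_log_dir_writable(log_dir)
    print(message, file=sys.stderr)
    sys.exit(0 if ok else 1)
```

Run from an entrypoint (for example `python check_log_dir.py /var/log/myapp && exec my-app`, where both names are placeholders), a failed check stops the container with the UID and the OS error visible in `docker logs`, instead of leaving you with silently missing log files.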

Resource constraints: Logging, while valuable, consumes resources such as CPU, memory, and disk I/O. If the logging process is too resource-intensive, it can negatively impact the performance of your containerized application. This is especially concerning in resource-constrained environments or when running multiple containers on the same host.

How to Address Resource Constraints:

Monitor Resource Usage: Use Docker's built-in `docker stats` command or external monitoring solutions to track how much CPU, memory, and disk I/O your containers consume. Note that `docker stats` reports per-container totals, so the cost of logging shows up as part of each container's overall usage rather than as a separate figure. Watching these numbers can help you identify bottlenecks and determine whether logging is affecting your container's performance.

Optimize Log Frequency and Detail: Adjust the level of logging detail and the frequency of logs to minimize resource usage. For example, reduce the log verbosity (e.g., avoid DEBUG-level logs in production), or log only significant events rather than every small operation.
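`docker stats` can also be scripted. The sketch below takes a single snapshot with `docker stats --no-stream --format '{{json .}}'` and flags containers whose CPU or memory percentage exceeds a threshold. It assumes the JSON field names `Name`, `CPUPerc`, and `MemPerc` that current Docker releases emit (verify them against your version), and the 80% thresholds are arbitrary examples.

```python
import json
import subprocess


def parse_stats(lines):
    """Turn `docker stats --format '{{json .}}'` lines into (name, cpu%, mem%) tuples."""
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        stats = json.loads(line)
        try:
            cpu = float(stats["CPUPerc"].rstrip("%"))
            mem = float(stats["MemPerc"].rstrip("%"))
        except ValueError:
            continue  # skip rows whose values are not numeric
        rows.append((stats["Name"], cpu, mem))
    return rows


def over_threshold(rows, cpu_limit=80.0, mem_limit=80.0):
    """Names of containers above either (arbitrary, example) threshold."""
    return [name for name, cpu, mem in rows if cpu > cpu_limit or mem > mem_limit]


if __name__ == "__main__":
    # Single snapshot of all running containers; needs the docker CLI and daemon access.
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    rows = parse_stats(result.stdout.splitlines())
    for name, cpu, mem in rows:
        print(f"{name}: cpu={cpu:.1f}% mem={mem:.1f}%")
    print("over threshold:", over_threshold(rows))
```

Running this on a schedule and comparing results before and after a change in log verbosity gives a rough, container-level view of what that logging costs.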

Limit CPU and Memory: You can restrict the CPU and memory available to a container by setting resource limits in the `docker run` command or in your container orchestration tool (like Kubernetes). Limits cap the container's total usage, so a runaway process, including excessive logging, cannot starve other containers on the same host. Keep in mind that the limit covers everything inside the container, so in-container logging still competes with your application for the same budget.

```bash
docker run --memory=256m --cpus="1.0" <my-image>
```

This limits the container to 256 MB of memory and at most one CPU's worth of processing time, preventing it from using excessive resources for logging or other tasks.
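To confirm from inside a running container that such limits are in effect, you can read the container's cgroup files. This sketch assumes a cgroup v2 host, where Docker typically exposes the container's own cgroup at `/sys/fs/cgroup`; with `--memory=256m --cpus="1.0"`, `memory.max` should typically contain `268435456` and `cpu.max` should read `100000 100000` (quota and period in microseconds). The function names are hypothetical.

```python
from pathlib import Path
from typing import Optional

# cgroup v2 unified hierarchy, as seen from inside the container
CGROUP_ROOT = Path("/sys/fs/cgroup")


def parse_memory_max(text: str) -> Optional[int]:
    """memory.max holds a byte count, or 'max' when no limit is set."""
    text = text.strip()
    return None if text == "max" else int(text)


def parse_cpu_max(text: str) -> Optional[float]:
    """cpu.max holds '<quota> <period>'; quota is 'max' when unlimited."""
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def read_limits(root: Path = CGROUP_ROOT) -> dict:
    return {
        "memory_bytes": parse_memory_max((root / "memory.max").read_text()),
        "cpus": parse_cpu_max((root / "cpu.max").read_text()),
    }


if __name__ == "__main__":
    try:
        print(read_limits())
    except FileNotFoundError:
        print("cgroup v2 limit files not found (cgroup v1 host, or not in a container?)")
```

A `cpus` value of 1.0 means the container may use one CPU's worth of time per scheduling period, spread across however many cores it runs on.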

By following these best practices and troubleshooting recommendations, you can keep process logging in your Docker containers running smoothly.

FAQs

How often should I log processes in a Docker container?

The right logging frequency depends on your specific requirements. For most applications, capturing process information every 1 to 5 minutes strikes a good balance between gaining useful insight and keeping performance overhead low. Containers that handle high activity or sensitive operations may warrant more frequent snapshots. In every case, weigh the resource constraints of your environment when choosing the logging interval.
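A minimal sketch of such periodic process logging, assuming a Linux container with `/proc` mounted (Docker's default): it reads each process's command line from `/proc/<pid>/cmdline` and prints one JSON line per snapshot to stdout, where Docker's logging driver picks it up. The 60-second interval is only an example at the frequent end of the 1 to 5 minute range, and the function names are hypothetical.

```python
import json
import os
import time
from datetime import datetime, timezone


def snapshot_processes(proc: str = "/proc") -> list[dict]:
    """Return PID and command line for every process visible in this PID namespace."""
    processes = []
    for entry in os.listdir(proc):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            with open(os.path.join(proc, entry, "cmdline"), "rb") as f:
                raw = f.read()
        except OSError:
            continue  # process exited between listdir() and open()
        # Arguments are NUL-separated; kernel threads have an empty cmdline.
        cmd = raw.replace(b"\0", b" ").decode(errors="replace").strip()
        processes.append({"pid": int(entry), "cmd": cmd})
    return sorted(processes, key=lambda p: p["pid"])


def log_processes_forever(interval_s: float = 60.0) -> None:
    while True:
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "processes": snapshot_processes(),
        }
        print(json.dumps(record), flush=True)  # stdout is captured by Docker
        time.sleep(interval_s)


if __name__ == "__main__":
    log_processes_forever()
```

Because it only reads `/proc`, this sees exactly the processes in the container's PID namespace, the same set `ps` would show from inside the container.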

Can logging all processes impact container performance?

Yes, extensive logging can consume significant CPU, memory, and disk resources, which might degrade container performance, especially in resource-constrained environments. It’s important to monitor the container’s resource usage through tools like docker stats or external monitoring platforms, and adjust the logging frequency or verbosity as needed to minimize any performance impact.

What's the difference between logging container processes and host processes?

Processes inside a container run in their own isolated namespaces (including a separate PID namespace), so they have a limited view of the system: they see only the container's processes, and the container's main process typically runs as PID 1. Host processes, by contrast, have a view of the full system, and from the host you can also see container processes, under different host-side PIDs. Logging processes from inside a container therefore captures activity within the container's isolated environment, which can differ from what you observe for the same processes on the host.

How can I automate process logging in Docker containers?

You can automate process logging in Docker containers using several methods:

- Wrapper Scripts: Create and run scripts alongside your main application to handle logging and process monitoring tasks automatically.

- Process Managers: Use process management tools like Supervisor to run and monitor multiple processes within your container and capture each one's output in logs automatically.

- Container Orchestration: Leverage platforms such as Kubernetes, where node-level logging agents (commonly deployed as a DaemonSet) can collect logs from every container and forward them to a central backend, providing more advanced logging options and seamless integration with monitoring tools.

- Monitoring Tools: Utilize logging and monitoring solutions like SigNoz or the ELK Stack to automate log collection and analysis, reducing manual effort and increasing the depth of insights you can gather.
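As an illustration of the wrapper-script approach, the sketch below launches the real application as a child process, relays each line of its output with a timestamp and PID, and logs when the process starts and exits. It is a hypothetical helper (the `run_and_log` name, the log format, and the `wrapper.py` file name are invented for this example) that uses only Python's standard `subprocess` module.

```python
import subprocess
import sys
import time


def _print_flush(message: str) -> None:
    # Flush immediately so lines reach `docker logs` without buffering delays.
    print(message, flush=True)


def run_and_log(cmd: list[str], log=_print_flush) -> int:
    """Run `cmd`, relay its output with timestamps, and log start and exit."""
    started = time.monotonic()
    # Merge stderr into stdout so every line goes through the same relay loop.
    proc = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True)
    log(f"wrapper: started pid={proc.pid} cmd={cmd!r}")
    for line in proc.stdout:
        stamp = time.strftime("%Y-%m-%dT%H:%M:%S")
        log(f"{stamp} [pid {proc.pid}] {line.rstrip()}")
    code = proc.wait()
    log(f"wrapper: pid={proc.pid} exited with code={code} "
        f"after {time.monotonic() - started:.1f}s")
    return code


if __name__ == "__main__":
    # Hypothetical usage as an entrypoint: python wrapper.py my-app --port 8080
    if len(sys.argv) < 2:
        sys.exit("usage: wrapper.py COMMAND [ARGS...]")
    sys.exit(run_and_log(sys.argv[1:]))
```

If the wrapper runs as the container's PID 1, it should also forward SIGTERM to the child (for example with Python's `signal` module) so that `docker stop` shuts the application down cleanly; the sketch leaves that out for brevity.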

Automating process logging ensures consistent, real-time visibility into your container’s performance and behavior, enhancing your ability to troubleshoot and optimize your containerized applications.